Nvidia GTC 2023 Keynote Live Blog

Jensen begins by discussing the growing demands of the modern digital world, with growth that is outpacing Moore’s Law. We’ll hear from leaders in the AI industry, robotics, self-driving cars, manufacturing, science, and more.

Jensen says the purpose of GTC is to inspire the world about what is possible with accelerated computing, and to celebrate the achievements of the scientists who use it.

And now there’s the latest “I Am AI” intro video, a GTC staple of the past few years. The underlying musical composition doesn’t seem to change at all, but it’s updated every time with a new segment.

Not surprisingly, Jensen starts with a deep dive into ChatGPT and OpenAI. One of the more famous moments in the deep learning revolution was AlexNet in 2012, an image recognition algorithm that required 262 petaflops’ worth of computation to train. One of those researchers is now at OpenAI, and training GPT-3 required 323 zettaflops’ worth of computation. That’s more than a million times the computation, just 10 years later. That is what was required as the foundation of ChatGPT.
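As a quick sanity check on the "more than a million times" claim, the two figures quoted above can be divided directly. This is only a back-of-the-envelope sketch; the variable names are ours, and the figures are taken as total operation counts.

```python
# Figures quoted in the keynote, converted to raw operation counts.
PETA = 10**15
ZETTA = 10**21

alexnet_ops = 262 * PETA   # AlexNet training, 2012
gpt3_ops = 323 * ZETTA     # GPT-3 training

# How many times more computation GPT-3 needed than AlexNet.
ratio = gpt3_ops / alexnet_ops
print(f"GPT-3 needed about {ratio:,.0f}x the computation of AlexNet")
```

The ratio works out to roughly 1.23 million, which matches the keynote's framing.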

In addition to the computation needed to train models used in deep learning, Nvidia has hundreds of libraries useful for various industries and models. Jensen looks at many of the largest libraries and the companies that use them.

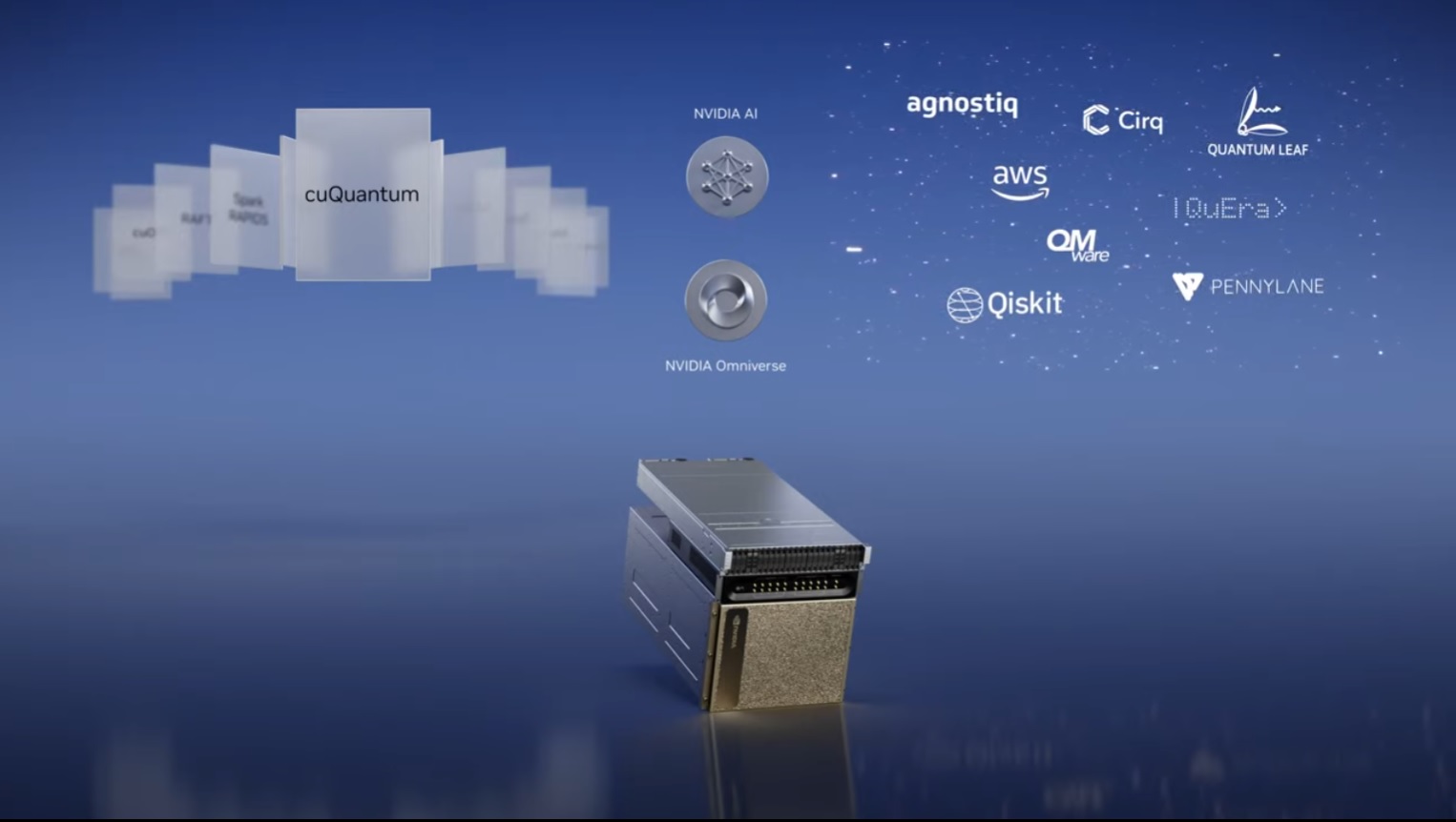

For example, Nvidia’s quantum platform, including cuQuantum, is used to help researchers in the field of quantum computing. People predict that quantum computing will go from theoretical to practical in the next decade or two (or three?).

Jensen is currently talking about the traveling salesman problem, an NP-hard problem with no known efficient solution. Many real-world businesses have to deal with it in the form of pickup and delivery routing. Nvidia’s hardware and libraries helped set a new record in calculating the optimal route for this task, and AT&T uses the technology internally.
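To illustrate why the problem gets hard so quickly, here is a minimal brute-force sketch that tries every possible route. It is purely illustrative and is not how Nvidia's libraries solve it; the four-city distance matrix and the function names are made up for the example.

```python
from itertools import permutations
from math import factorial


def route_count(n_cities: int) -> int:
    """Number of distinct orderings to check when the start city is fixed."""
    return factorial(n_cities - 1)


def brute_force_tsp(dist):
    """Return (cost, route) of the cheapest round trip starting at city 0."""
    n = len(dist)
    best = None
    for middle in permutations(range(1, n)):
        route = (0, *middle, 0)
        # Sum the distance of each leg along the route.
        cost = sum(dist[a][b] for a, b in zip(route, route[1:]))
        if best is None or cost < best[0]:
            best = (cost, route)
    return best


# Hypothetical symmetric distances between four cities.
DIST = [
    [0, 10, 15, 20],
    [10, 0, 35, 25],
    [15, 35, 0, 30],
    [20, 25, 30, 0],
]

best_cost, best_route = brute_force_tsp(DIST)
print(best_cost, best_route)
print(route_count(4), route_count(15))
```

Four cities means only six routes to check, but fifteen cities already means more than 87 billion, which is why exhaustive search is impractical for real delivery fleets and why heuristics and accelerated solvers are used instead.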

There’s lots of discussion of other libraries such as cuOpt, Triton, CV-CUDA (for computer vision), VPF (Python video encoding and decoding), tools for the medical field, and so on. Apparently, Nvidia’s technology has brought the cost of genome sequencing down to $100. (Don’t worry, you would likely still be charged many times that if you needed your DNA sequenced.)

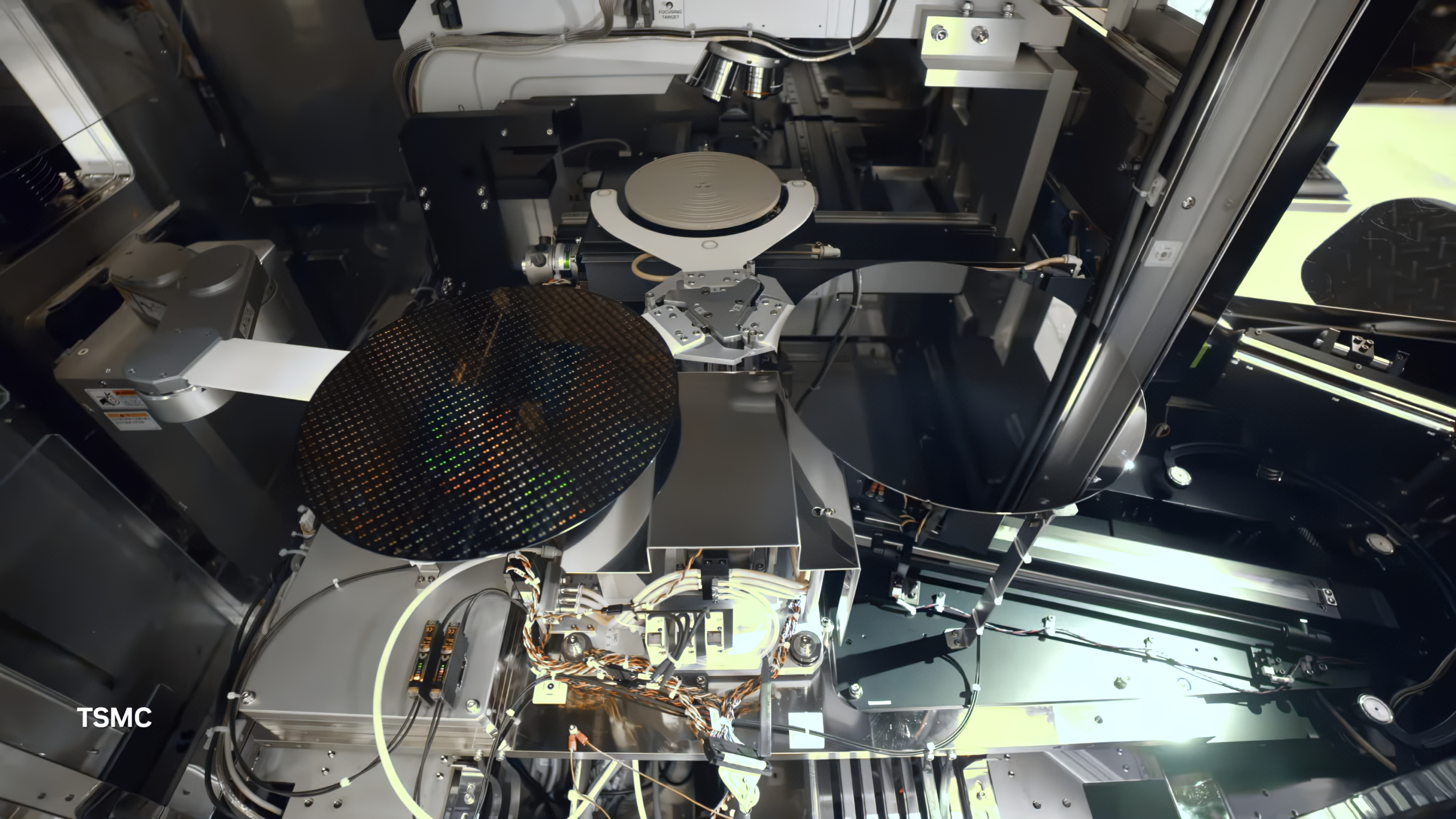

The big one is cuLitho, a new tool that helps optimize one of the major steps in the design of modern processors. There’s a separate, more detailed explanation of Nvidia’s computational lithography work, but basically, creating the patterns and masks used in modern lithography processes is so complex that, according to Nvidia, the calculations for just one mask can take several weeks. cuLitho, a computational lithography library, offers up to a 40x performance improvement over currently used tools.

With cuLitho, a single reticle can now be processed in 8 hours instead of the previous 2 weeks. It can also run on 500 DGX systems compared to 40,000 CPU servers, reducing power costs by a factor of 9.
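The quoted reticle times line up with the "up to 40x" claim, as a quick calculation shows. This sketch assumes "2 weeks" means 14 full days of continuous compute, which the keynote did not spell out; the variable names are ours.

```python
HOURS_PER_WEEK = 7 * 24

cpu_hours = 2 * HOURS_PER_WEEK   # "2 weeks" on the existing tools (assumed continuous)
culitho_hours = 8                # "8 hours" with cuLitho

# Wall-clock speedup for a single reticle.
speedup = cpu_hours / culitho_hours

# CPU servers replaced by each DGX system in the quoted comparison.
server_ratio = 40_000 / 500

print(f"speedup: {speedup:.0f}x, servers per DGX system: {server_ratio:.0f}")
```

Under that assumption the speedup comes to 42x, and each DGX system stands in for 80 CPU servers.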

Nvidia has been talking about the Hopper H100 for over a year now, and it’s finally in full production and deployed across a number of data centers, including those of Microsoft Azure, Google, and Oracle. The heart of the latest DGX supercomputer, pictured above, consists of eight H100 GPUs with giant heatsinks in one system.

Naturally, getting your own DGX H100 setup is very costly, so the DGX Cloud solution offers them as an on-demand service. Services like ChatGPT, Stable Diffusion, and Dall-E leverage cloud solutions for some of their training, and DGX Cloud aims to open that up to even more people.

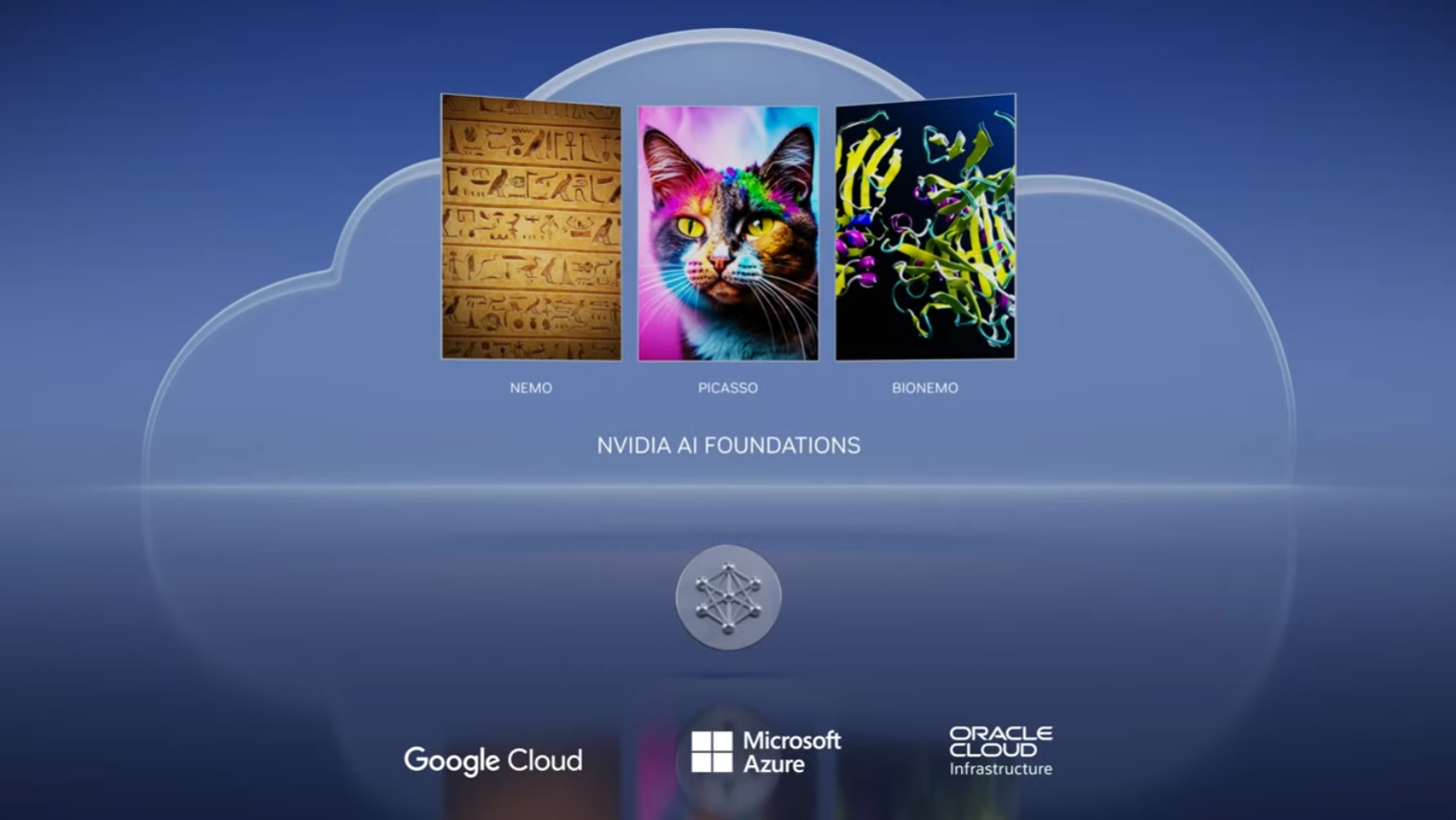

Jensen now talks about how the GPT (Generative Pre-trained Transformer) industry needs a “foundry” equivalent for these models: something like what TSMC does for the chip manufacturing industry, but for software and deep learning. To that end, he is announcing Nvidia AI Foundations.

Customers can work with Nvidia experts to train and create models and keep them updated based on user interaction. These can be completely custom models, such as image generation based on libraries of images you already own. Think of it as Stable Diffusion, built specifically for companies like Adobe, Getty Images, Shutterstock, and more.

And we didn’t just drop those names at random. Nvidia announced that all three of these companies are working with Nvidia’s Picasso tool, using “responsibly licensed” professional images.

Nvidia AI Foundations also includes BioNeMo, a tool to help the pharmaceutical research and drug development industry.

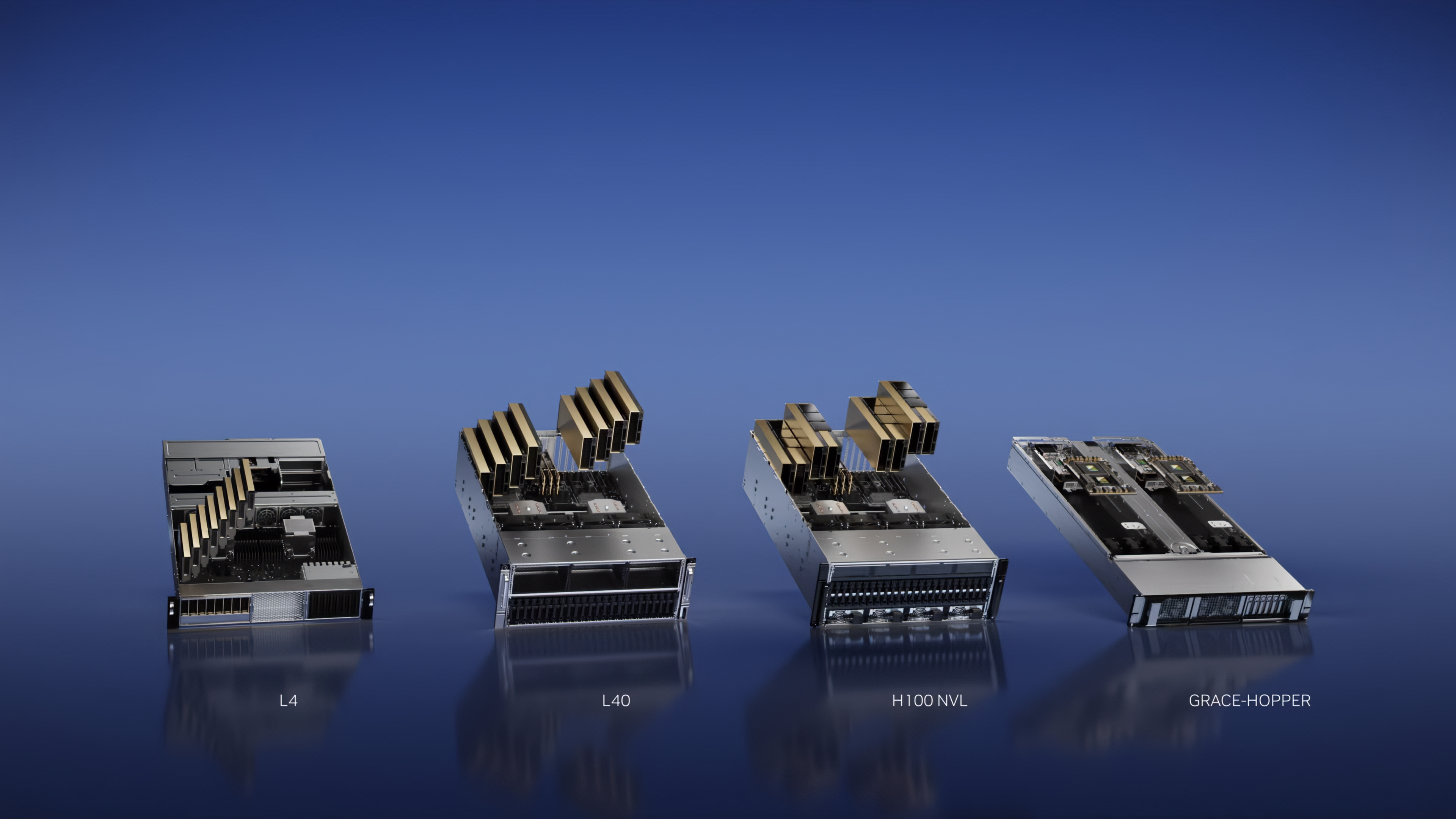

One of the benefits Nvidia offers enterprises is the ability to scale up to the largest installations on a single platform. This is the first time in this keynote that Nvidia has specifically mentioned the Grace-Hopper solution we’ve heard about in the past. Nvidia also announced the new L4, L40, and H100 NVL.

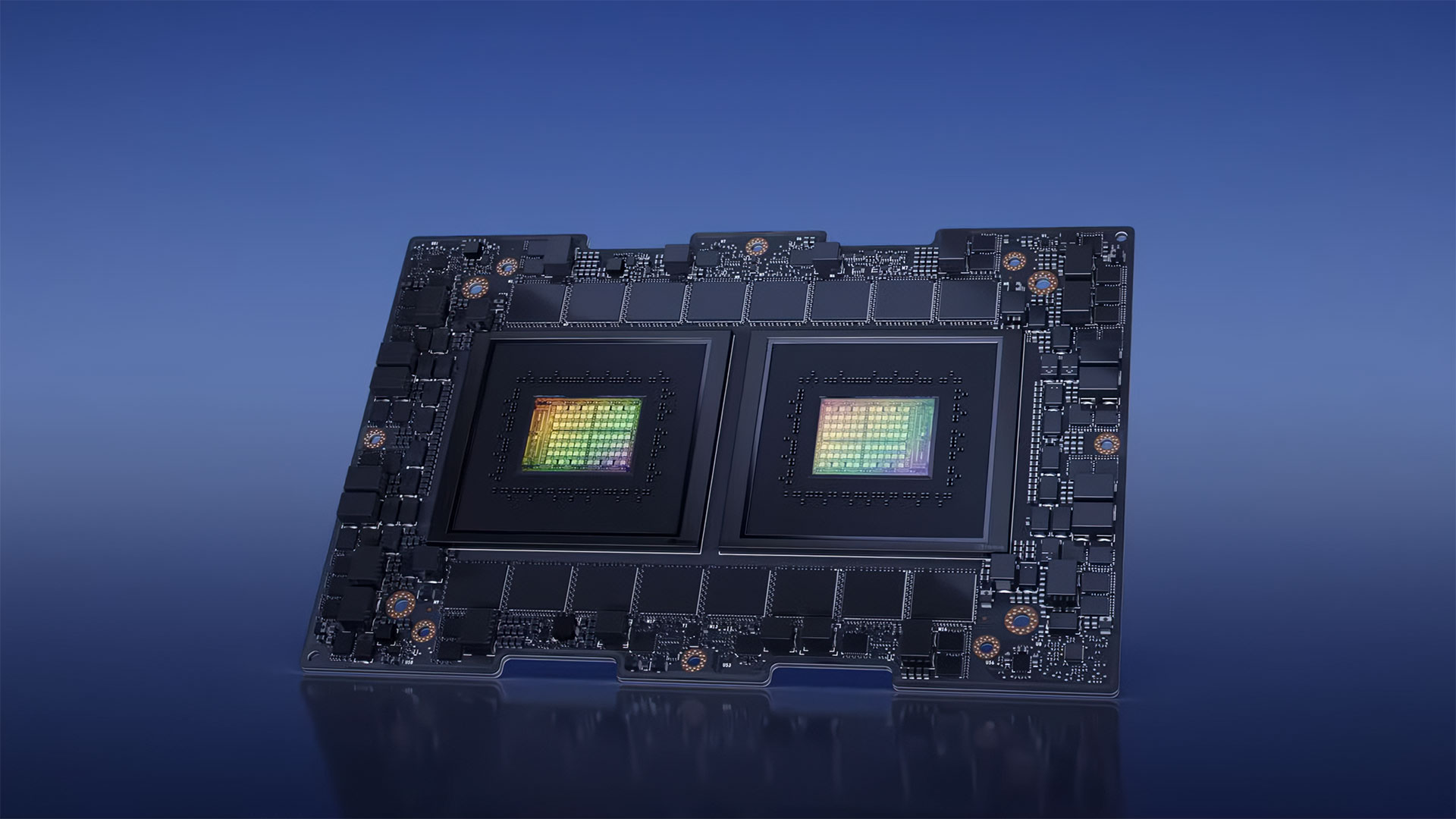

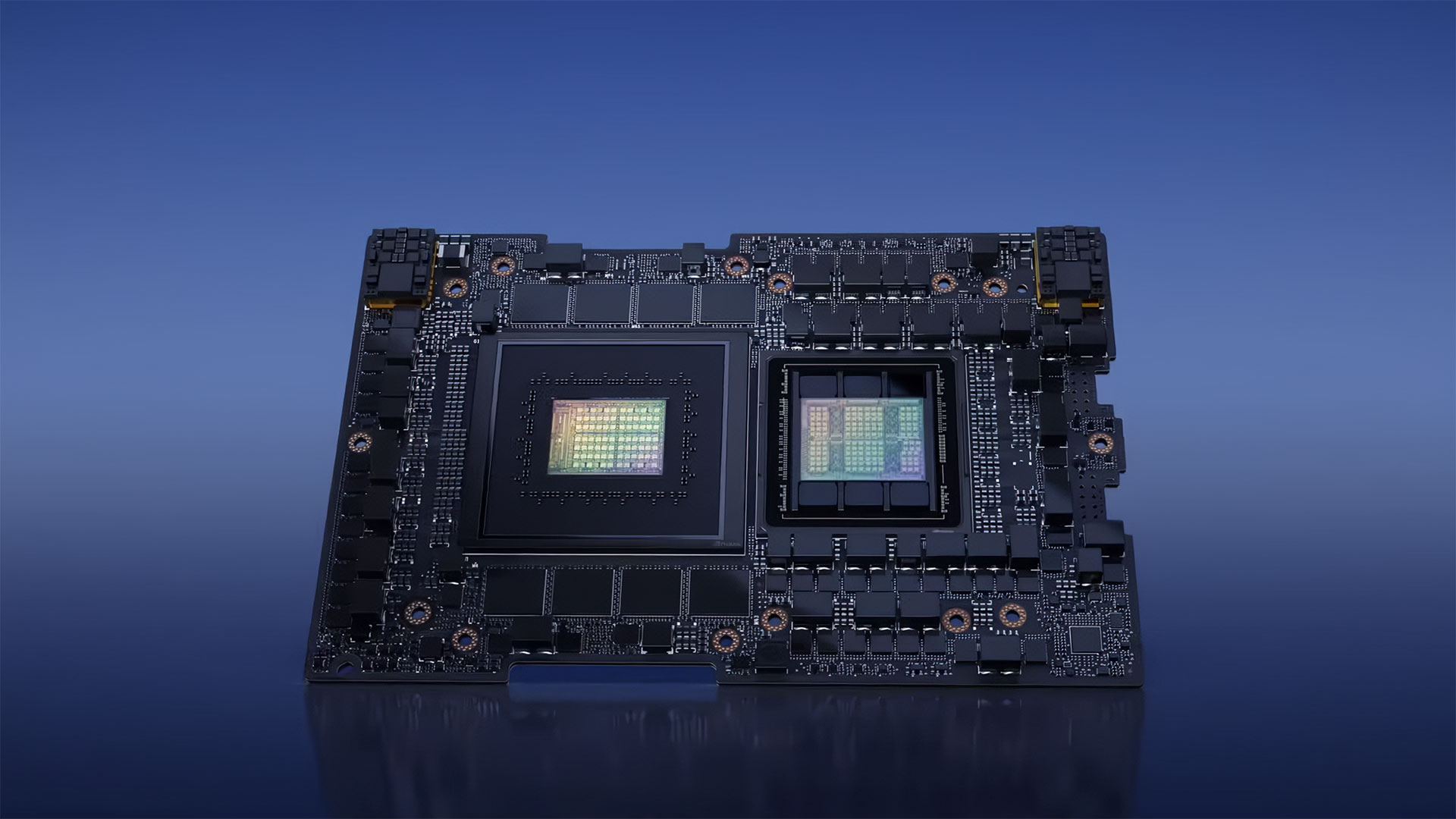

The H100 NVL is a PCI Express solution with two Hopper H100 GPUs linked by NVLink, so it’s pretty cool. Thanks to a total of 188 GB of HBM3 memory, it can handle the 175 billion parameter GPT-3 model by itself. (As an example, in our test earlier this week of a ChatGPT alternative running locally, even a 24GB RTX 4090 could only handle models with up to 30 billion parameters.)
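For a rough sense of how memory capacity relates to model size, the sketch below estimates the largest parameter count whose weights alone would fit in a given amount of memory. The precisions listed are our assumptions (the keynote did not say which one is used), 1 GB is treated as 10^9 bytes, and the estimate ignores activations, caches, and other runtime overhead.

```python
def max_params_billions(memory_gb: float, bytes_per_param: float) -> float:
    """Upper bound on parameters (in billions) whose weights fit in memory.

    With 1 GB = 1e9 bytes, GB divided by bytes-per-parameter gives billions.
    """
    return memory_gb / bytes_per_param


# Assumed weight precisions; not stated in the keynote.
PRECISIONS = {"16-bit": 2.0, "8-bit": 1.0, "4-bit": 0.5}

for name, memory_gb in [("H100 NVL", 188), ("RTX 4090", 24)]:
    for label, size in PRECISIONS.items():
        limit = max_params_billions(memory_gb, size)
        print(f"{name} at {label}: up to ~{limit:.0f}B parameters (weights only)")
```

Under these assumptions, 188 GB holds about 94 billion parameters at 16-bit precision and about 188 billion at 8-bit, so a GPT-3-class model would need reduced-precision weights to fit. Likewise, 24 GB tops out near 48 billion parameters at 4-bit before any overhead, which is consistent with a practical ceiling of around 30 billion.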

Amazon is talking about its Proteus robot, which was trained in Nvidia Isaac Sim and is currently deployed in warehouses. This is all thanks to Nvidia Omniverse and related technologies like Replicator, digital twins, and more. There’s plenty of other name dropping going on for those who want to keep track of such things, and the list goes on for a while. Isaac Gym also got a mention.

Anyway, Omniverse covers a wide range of possibilities. It covers design and engineering, sensor models, system makers, content creation and rendering, robotics, synthetic data and 3D assets, system integrators, service providers, and digital twins. (Yes, it’s all just copied from the slides.) It’s big and does a lot of useful things.

Look, Racer RTX is here again! I think it missed its release date, which was supposed to be last November or so. Then again, we got the very demanding Portal RTX instead, so it would be great if Racer RTX got a little more time for optimization before it becomes a fully playable game/demo/thing.

More Omniverse (take another shot!), this time using OVX servers from various vendors. These include Nvidia L40 Ada RTX GPUs and Nvidia’s BlueField-3 processor for connectivity.

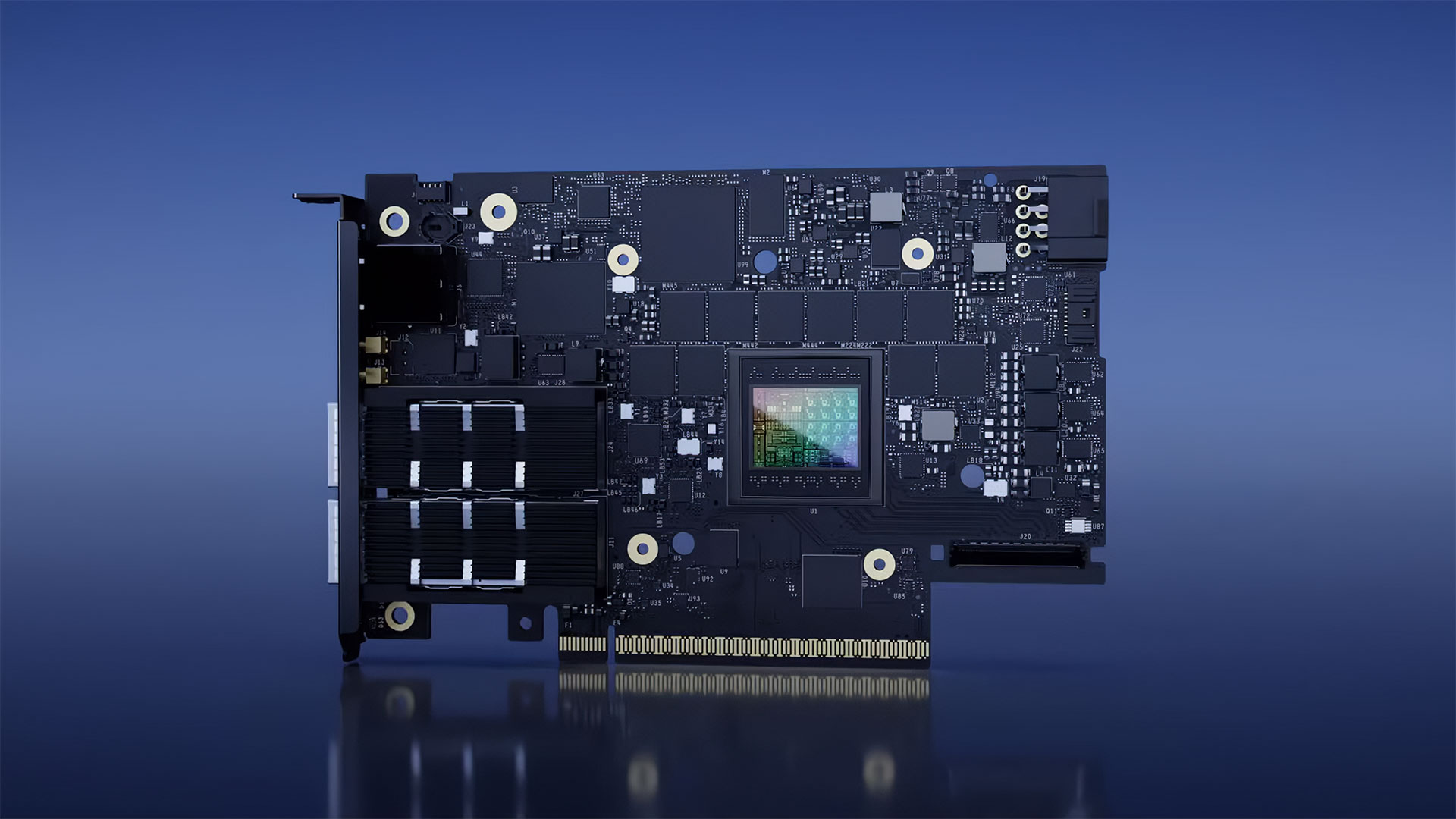

“From March,” we will also see new workstations with Ada RTX GPUs in both desktop and laptop configurations. That means now, apparently. Also, a gratuitous photo of the dual-CPU Grace, Grace-Hopper, and BlueField-3.

That concludes the GTC 2023 keynote. Jensen made a variety of announcements, and we’ll likely be covering more as additional presentations and details emerge.