Intel Data Center and AI Webinar Liveblog: Roadmap, New Chips, and Demos


Rivera thanked the audience for attending the webinar and also shared a summary of key announcements.

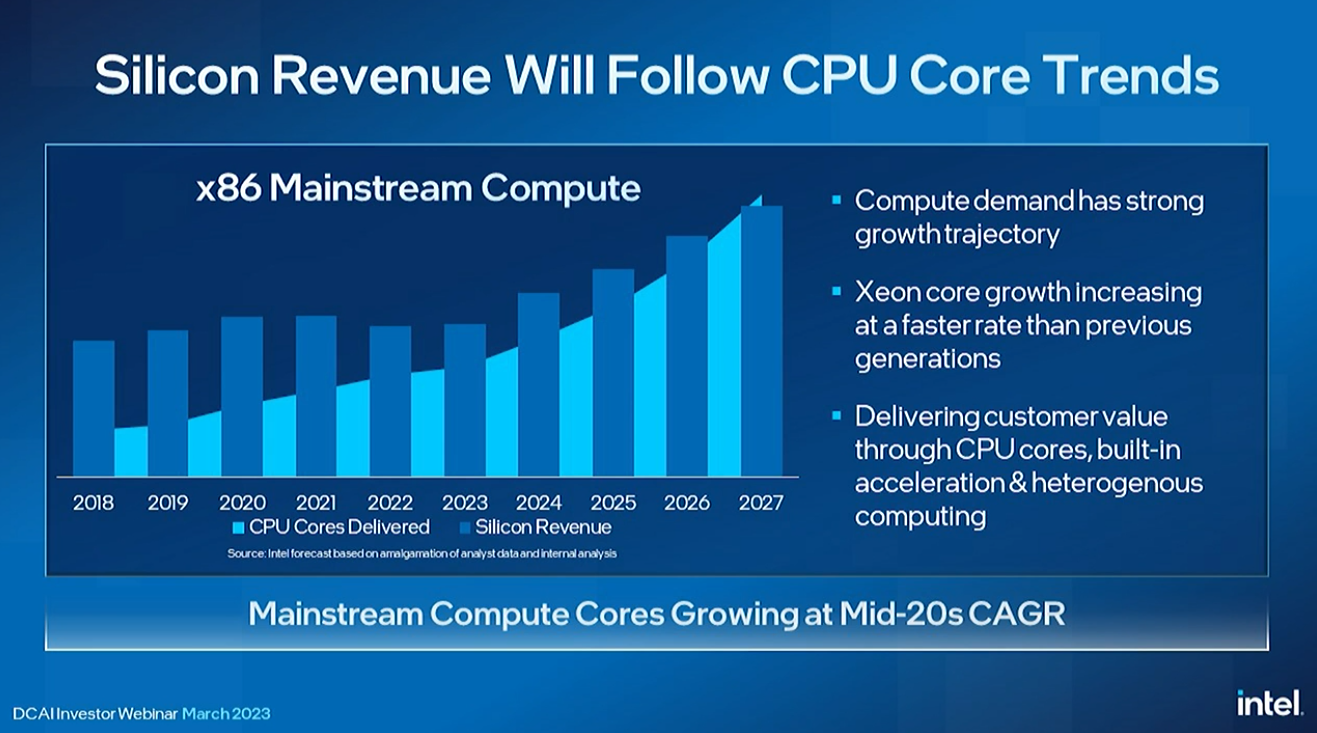

In summary, Intel announced that its first-generation efficiency Xeon, Sierra Forest, will feature a staggering 144 cores, offering better core density than AMD’s competing 128-core EPYC Bergamo chips. The company also showed the chip in a demo. Intel also revealed the first details of Clearwater Forest, its second-generation efficiency Xeon, which will debut in 2025. Intel is skipping its 20A process node in favor of the more performant 18A node for this chip.

Intel also presented several demos, including a head-to-head AI benchmark in which a 48-core Xeon delivered a roughly 4x performance advantage over a 48-core AMD EPYC Genoa, and memory throughput benchmarks in which the next-gen Granite Rapids Xeon delivered a staggering 1.5 TB/s of bandwidth in a dual-socket server.

As this is an investor event, the company will be holding a question-and-answer session focused on the financial aspects of the presentation. We won’t cover the Q&A here unless an answer relates specifically to hardware. If you’re interested in the financial side of the conversation, you can watch the webinar here.

Lavender also outlined the company’s efforts to provide scale and accelerate development through the Intel Developer Cloud. Intel has quadrupled the number of users since launching the program in 2021. He then handed the baton back to Sandra Rivera.

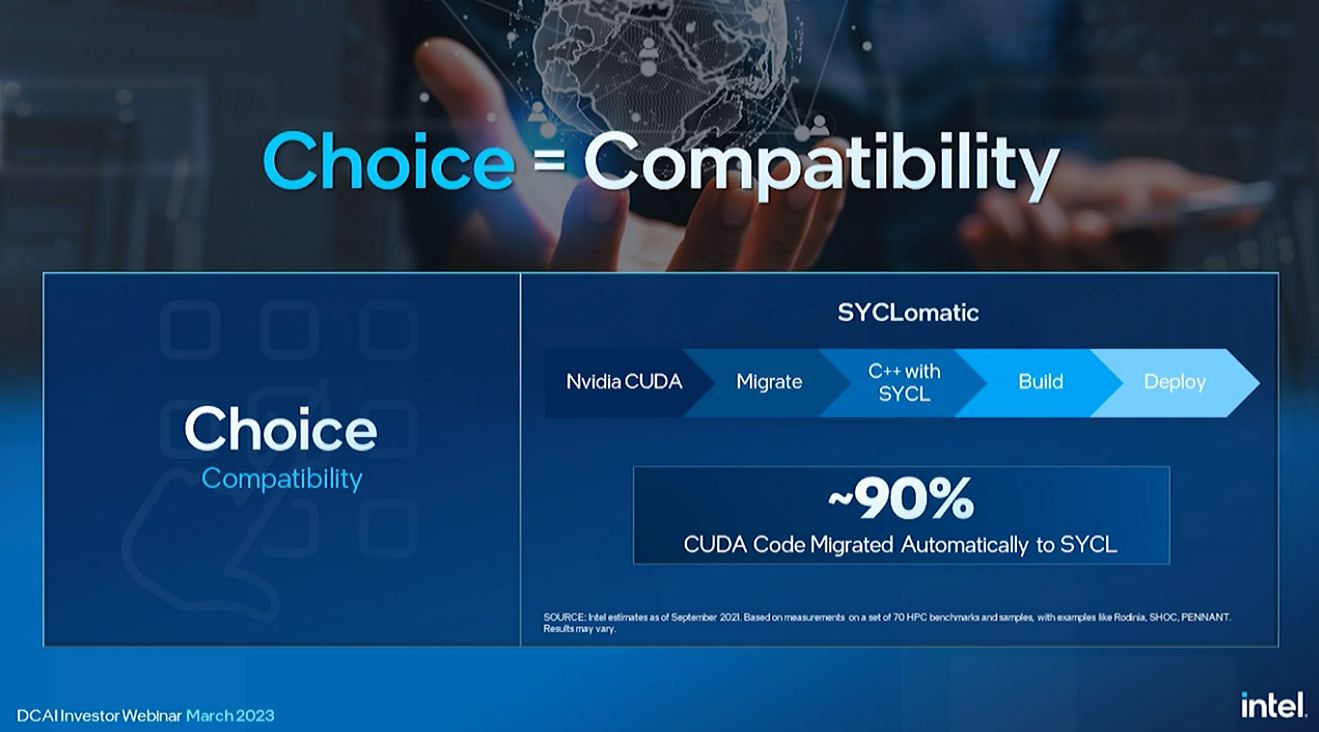

Intel launched SYCLomatic to automatically migrate CUDA code to SYCL.

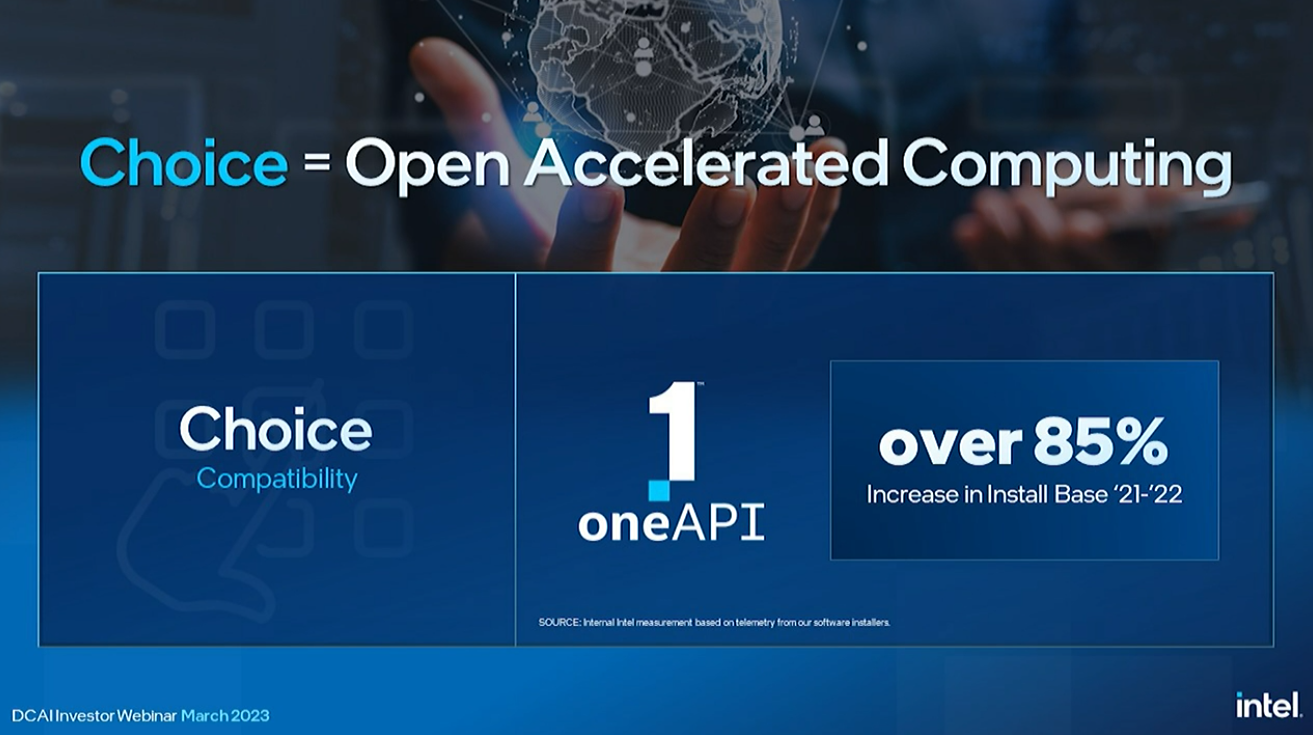

Intel’s commitment to oneAPI continues, with 6.2 million active developers using Intel tools.

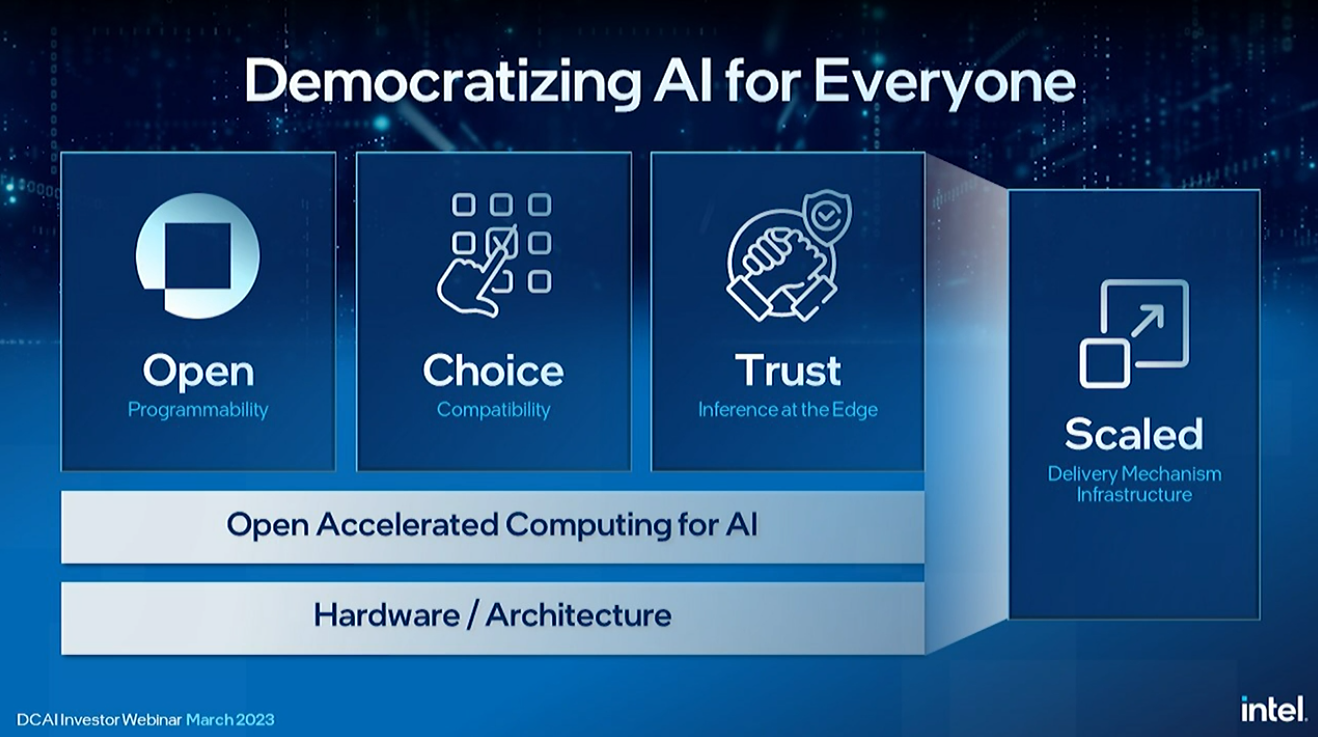

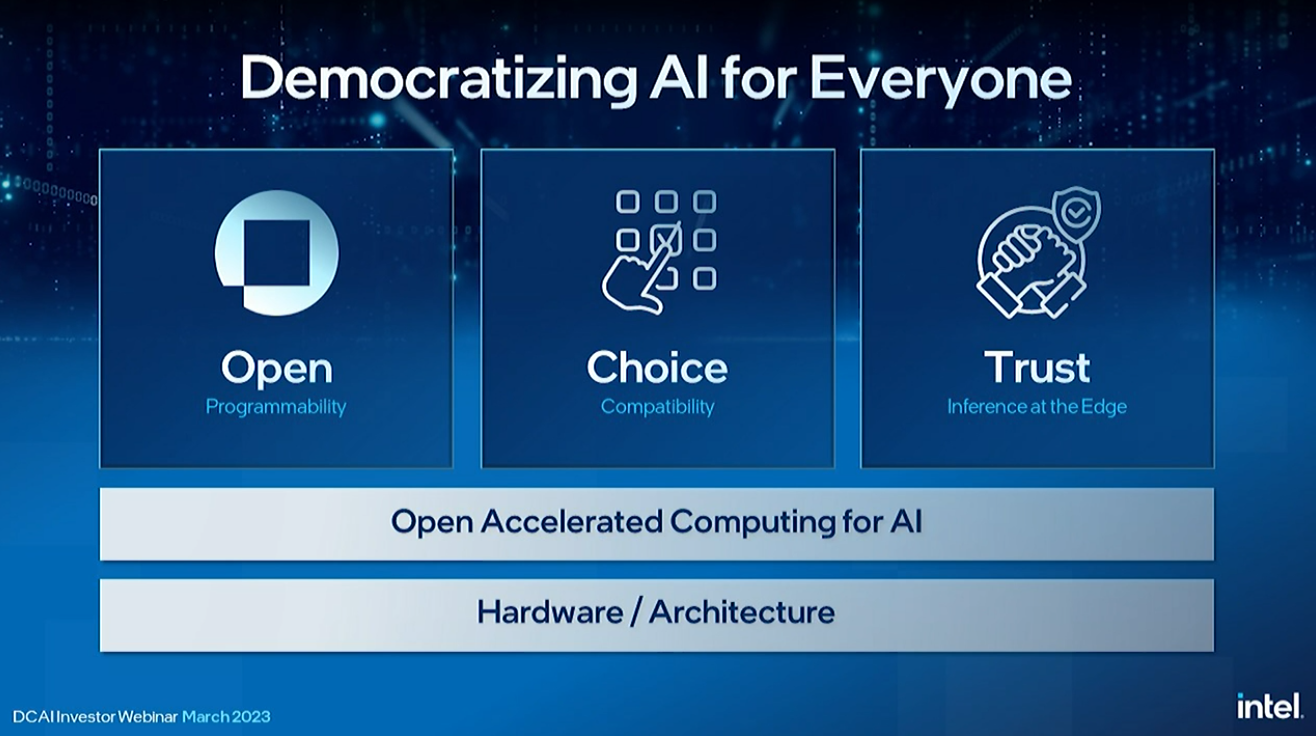

Intel is aiming for an open, multi-vendor approach to offer an alternative to Nvidia’s CUDA.

Intel is also working to build a software ecosystem for AI that rivals Nvidia’s CUDA. This includes an end-to-end approach spanning silicon and software, with security, confidentiality, and trust mechanisms at every point in the stack.

Intel SVP and CTO Greg Lavender joined the webcast to discuss the democratization of AI.

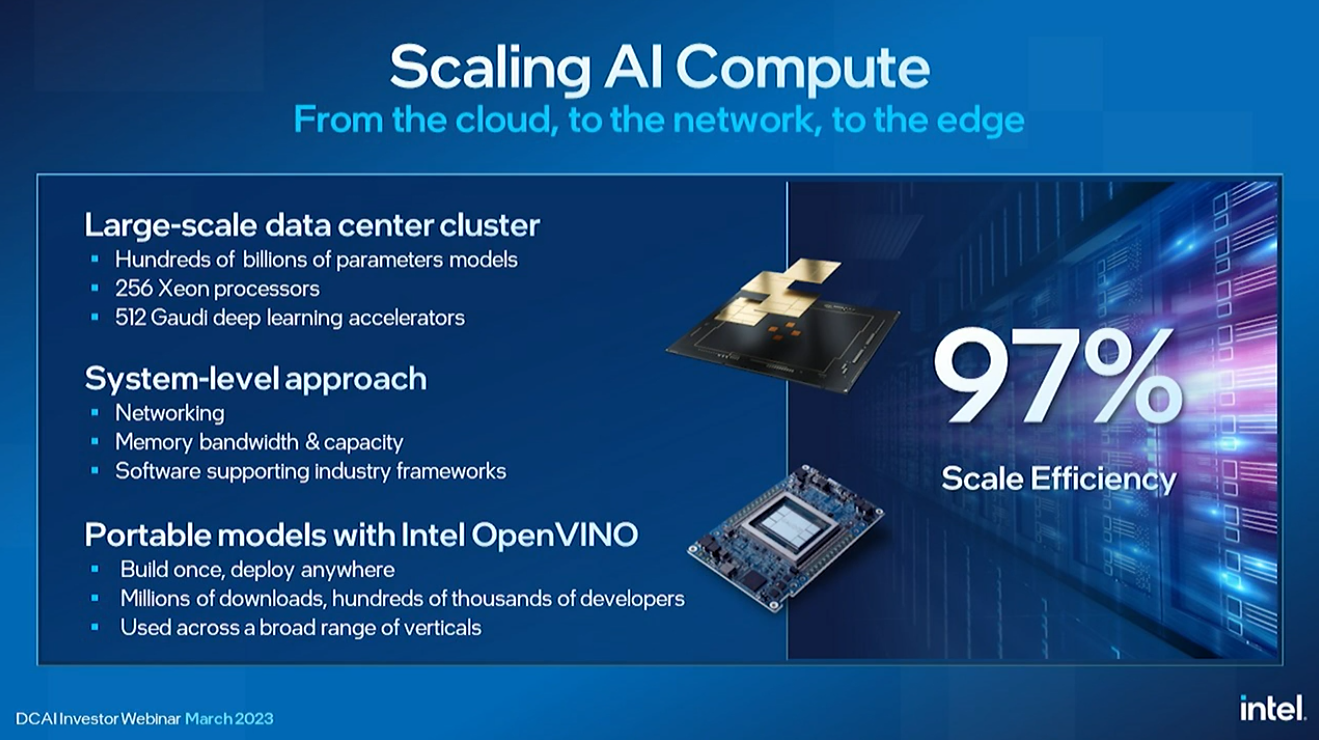

Rivera touted Intel’s 97% scaling efficiency in a cluster benchmark.
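
Scaling efficiency is commonly defined as the speedup a cluster achieves over a single node divided by the number of nodes, so 97% would mean the cluster delivers 97% of ideal linear scaling. Intel didn’t spell out its formula or node count, so this minimal sketch assumes that common definition, and the throughput numbers and the `scaling_efficiency` helper are hypothetical.

```python
def scaling_efficiency(single_node_throughput: float,
                       cluster_throughput: float,
                       nodes: int) -> float:
    """Fraction of ideal linear speedup achieved: (cluster / single) / nodes."""
    if nodes < 1 or single_node_throughput <= 0:
        raise ValueError("nodes must be >= 1 and single-node throughput positive")
    speedup = cluster_throughput / single_node_throughput
    return speedup / nodes


# Hypothetical numbers for illustration only, not Intel's benchmark data:
# one node does 100 units/s, eight nodes together do 776 units/s.
eff = scaling_efficiency(single_node_throughput=100.0,
                         cluster_throughput=776.0,
                         nodes=8)
print(f"Scaling efficiency: {eff:.0%}")
```

Under this definition, anything below 100% reflects overhead such as inter-node communication.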

CPUs are well suited to inference on smaller models, but discrete accelerators are important for large models. Intel is serving this market with its Gaudi accelerators and Ponte Vecchio GPUs. Hugging Face recently said that Gaudi delivered three times the performance with its Hugging Face Transformers library.

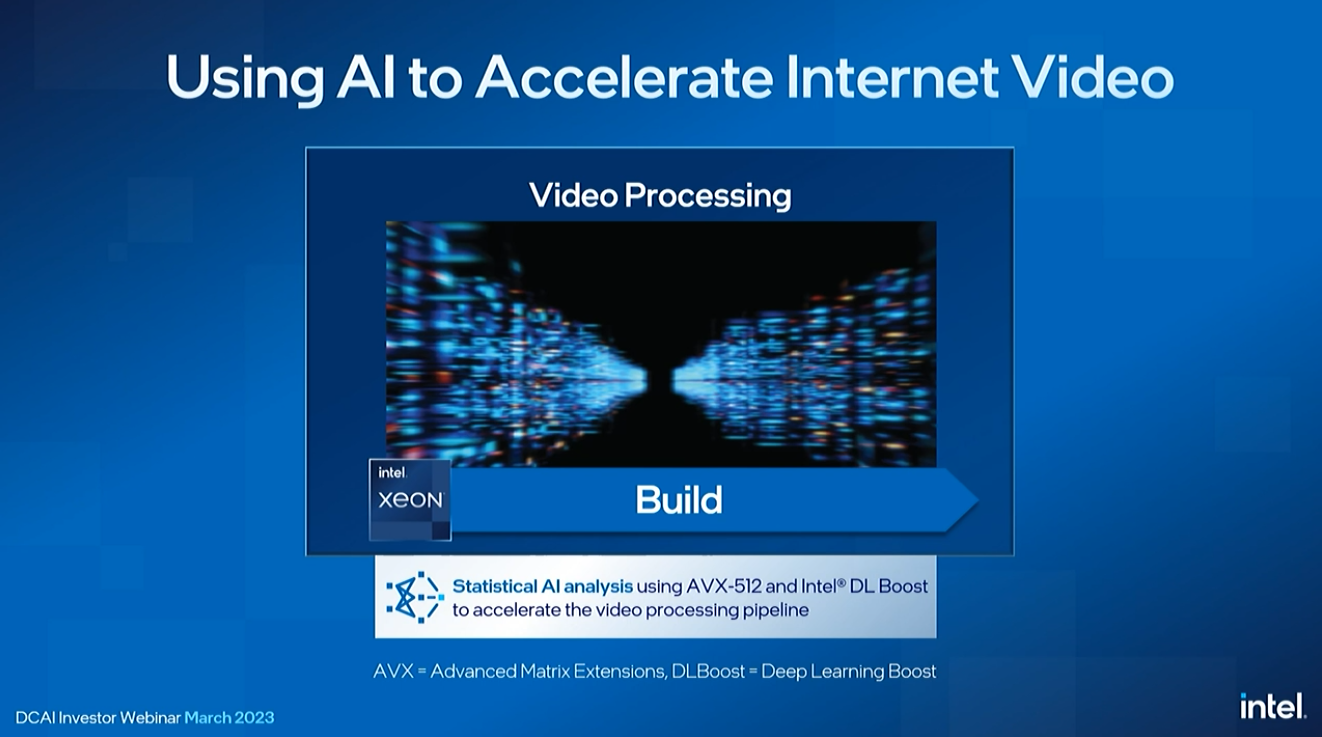

Intel works with content providers to run AI workloads on their video streams. AI-based computing can accelerate, compress, and encrypt data as it travels over networks. All of this runs on a single Sapphire Rapids CPU.

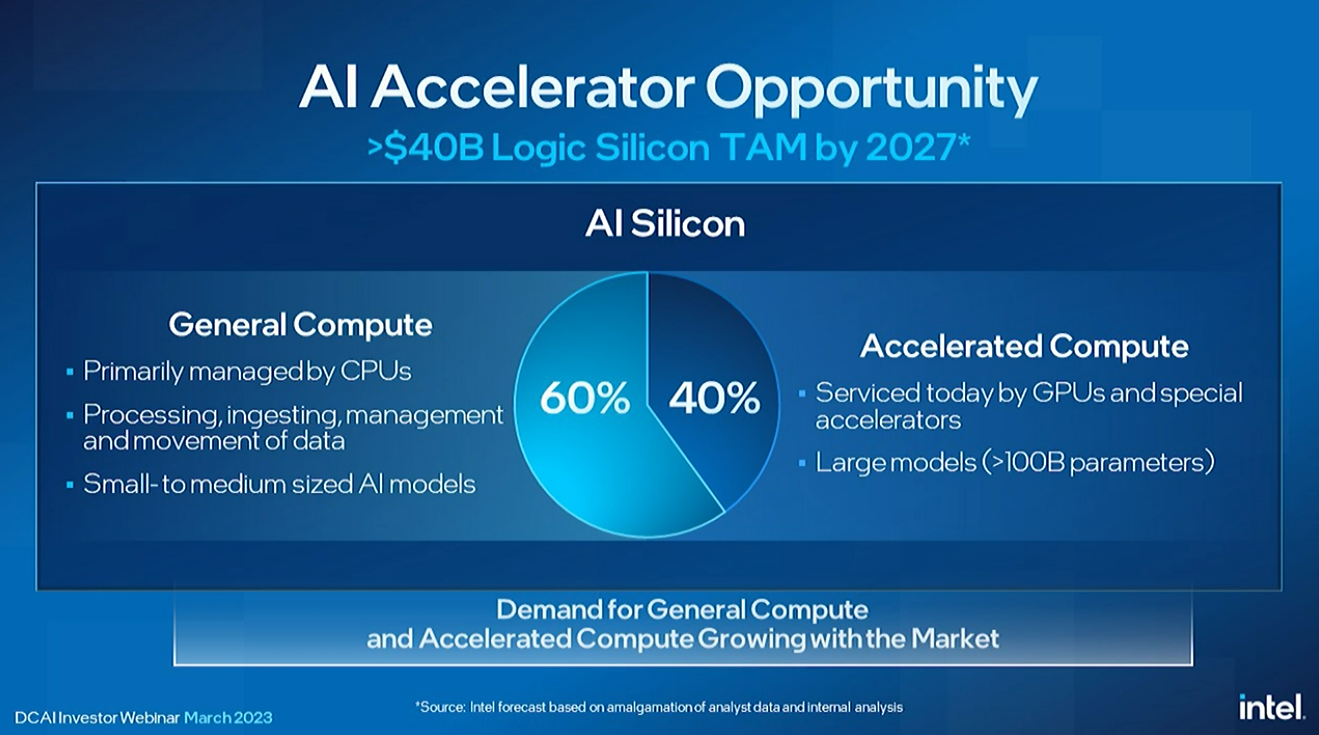

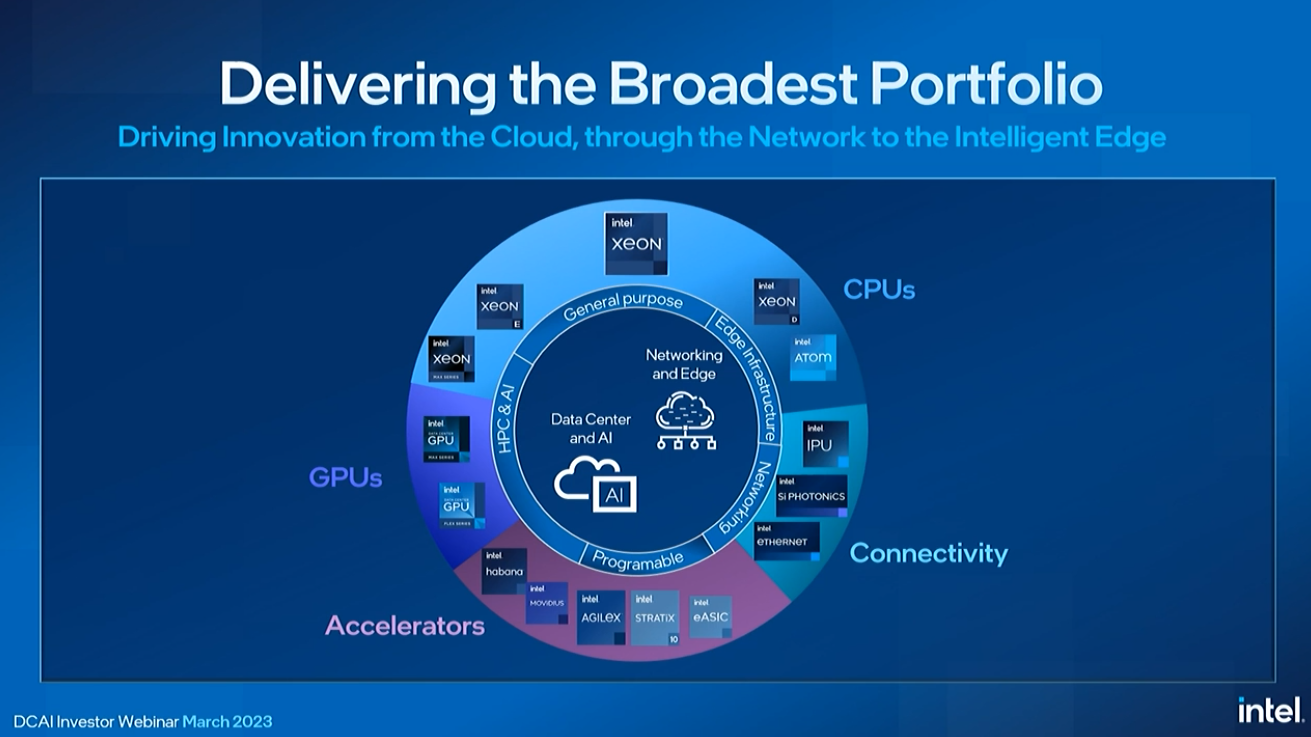

Rivera outlined Intel’s broader efforts in the AI space. Intel predicts that AI workloads will continue to run primarily on CPUs, with 60% of models, mainly small and medium-sized ones, running on CPUs. Large models account for the remaining 40% of the workload and run on GPUs or other custom accelerators.

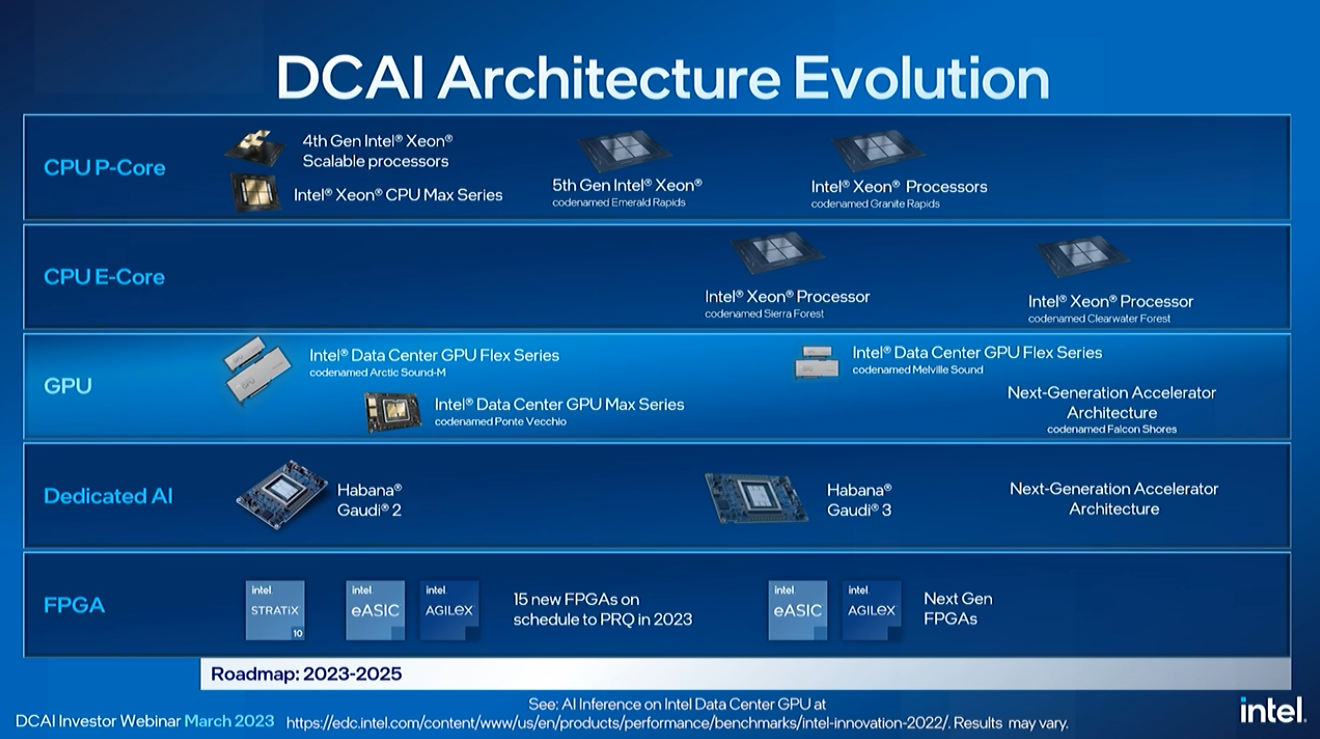

Intel also has a full lineup of other chips for AI workloads. Intel noted that it plans to launch 15 new FPGAs this year, a record for its FPGA group. We have yet to hear of a big win with Gaudi chips, but Intel continues to develop its lineup and has next-gen accelerators on its roadmap. The Gaudi 2 AI accelerator is shipping, and Gaudi 3 has taped in.

Rivera announced Clearwater Forest, the successor to Sierra Forest. Intel didn’t share many details beyond its 2025 release timeframe, but said it will use the 18A process for the chip instead of the 20A process node, which arrives half a year earlier. This will be Intel’s first Xeon chip to use the 18A process.

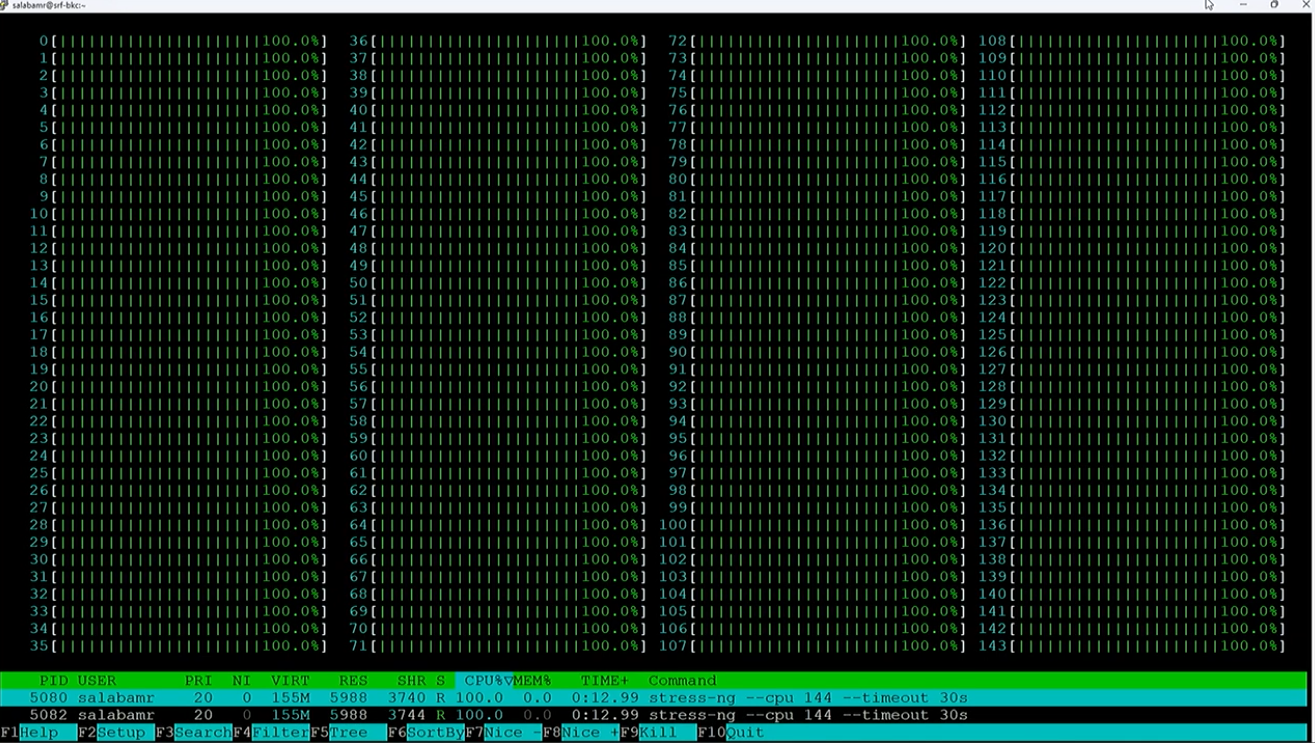

Spelman is back to show us all 144 cores of the Sierra Forest chip in action in the demo.

Intel’s E-core roadmap starts with the 144-core Sierra Forest, which works out to 288 cores in a single dual-socket server. Sierra Forest’s 144 cores beat AMD’s 128-core EPYC Bergamo in core count, but may not lead in thread count. Intel’s E-cores for the consumer market are single-threaded, and the company hasn’t revealed whether its data center E-cores will support Hyper-Threading. AMD says the 128-core Bergamo supports simultaneous multithreading, giving it a total of 256 threads per socket.
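
The core and thread comparison above is simple multiplication, sketched here in Python. It assumes two sockets per server and, because Intel hasn’t said whether Sierra Forest supports Hyper-Threading, models both a single-threaded case and a hypothetical two-way SMT case. The `Cpu` class and `server_totals` helper are illustrative names only.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Cpu:
    name: str
    cores_per_socket: int
    threads_per_core: int  # 2 with SMT/Hyper-Threading, 1 without

    def threads_per_socket(self) -> int:
        return self.cores_per_socket * self.threads_per_core


def server_totals(cpu: Cpu, sockets: int = 2) -> tuple[int, int]:
    """Return (cores, threads) for a server with `sockets` identical CPUs."""
    return cpu.cores_per_socket * sockets, cpu.threads_per_socket() * sockets


# Sierra Forest SMT support was not disclosed: model single-threaded E-cores
# (as on Intel's consumer chips) and, hypothetically, a two-way SMT variant.
sierra_forest_1t = Cpu("Sierra Forest (no SMT, assumed)", 144, 1)
sierra_forest_2t = Cpu("Sierra Forest (SMT2, hypothetical)", 144, 2)
bergamo = Cpu("EPYC Bergamo", 128, 2)

for cpu in (sierra_forest_1t, sierra_forest_2t, bergamo):
    cores, threads = server_totals(cpu)
    print(f"{cpu.name}: {cpu.threads_per_socket()} threads/socket, "
          f"{cores} cores / {threads} threads per dual-socket server")
```

If Sierra Forest’s E-cores stay single-threaded, Bergamo keeps the thread-count lead despite having fewer cores.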

Rivera said Intel was able to power on the silicon and boot an operating system within 18 hours, a company record. Success is paramount, as this chip is the lead vehicle for the “Intel 3” process node. Intel has already sampled the chip to a customer and demoed all 144 cores in action at the event. Intel is initially aiming its E-core Xeon models at specific types of cloud-optimized workloads, but expects them to be adopted for a much wider range of use cases once they hit the market.

You can see a demo here.

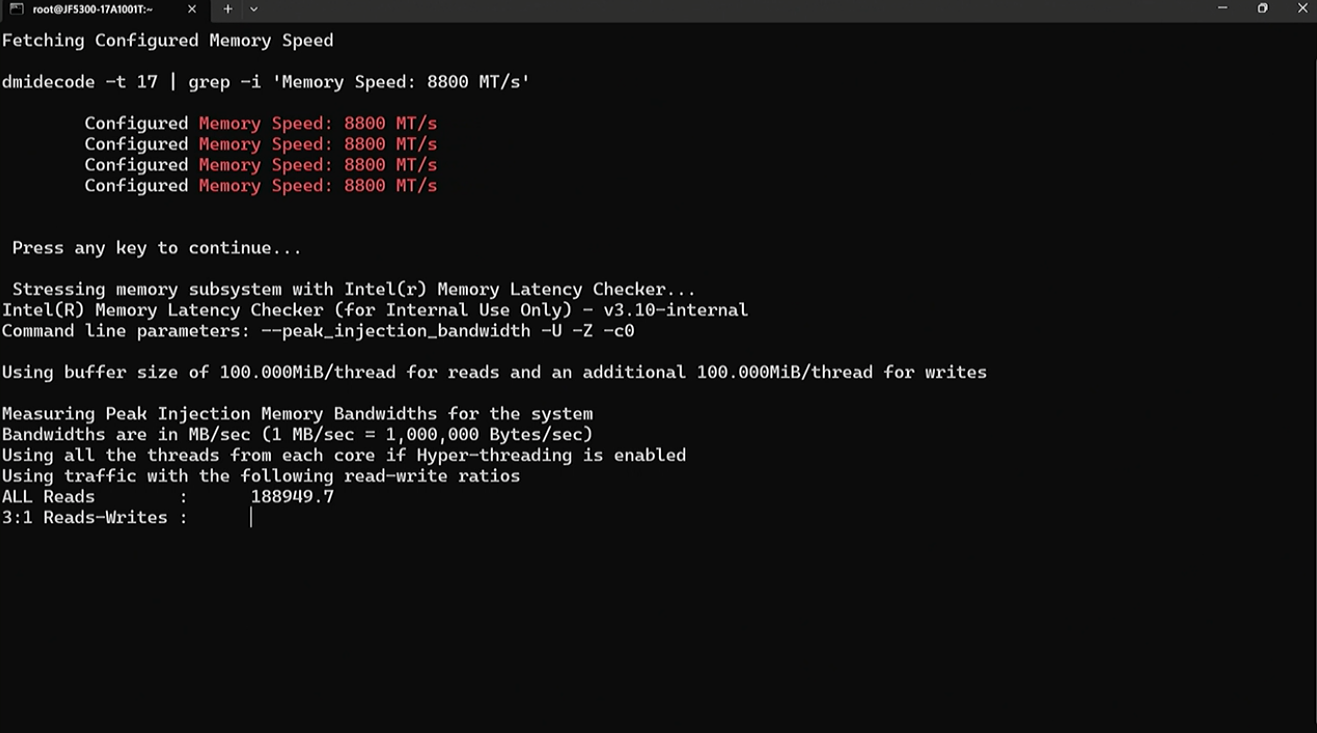

Intel demonstrated a dual-socket Granite Rapids server delivering 1.5 TB/s of DDR5 memory bandwidth during the webinar. At that level, Granite Rapids provides more throughput than Nvidia’s 960 GB/s Grace CPU Superchip, which is designed specifically for memory bandwidth, and more than AMD’s dual-socket Genoa, which has a theoretical peak of 920 GB/s.

Intel achieved this feat using DDR5-8800 Multiplexer Combined Rank (MCR) DRAM, a new type of bandwidth-optimized memory invented by Intel. Intel has already introduced this memory together with SK hynix.
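
Theoretical peak DRAM bandwidth is the transfer rate times 8 bytes per transfer (one 64-bit DDR5 channel) times the number of channels and sockets. With 12 channels of DDR5-4800 per socket, a dual-socket Genoa works out to about 921.6 GB/s, in line with the ~920 GB/s figure above. This sketch assumes Granite Rapids also has 12 memory channels per socket, which the webinar did not state, and treats the 1.5 TB/s demo result and Nvidia’s 960 GB/s as quoted figures rather than derived ones. `peak_bandwidth_gbs` is an illustrative helper.

```python
def peak_bandwidth_gbs(mega_transfers: int, channels: int, sockets: int = 2,
                       bytes_per_transfer: int = 8) -> float:
    """Theoretical peak DRAM bandwidth in decimal GB/s.

    A DDR5 channel is 64 bits wide (two 32-bit subchannels), i.e. 8 bytes
    per transfer; ECC bits are not counted.
    """
    bytes_per_second = (mega_transfers * 1_000_000 * bytes_per_transfer
                        * channels * sockets)
    return bytes_per_second / 1e9


genoa = peak_bandwidth_gbs(4800, channels=12)    # DDR5-4800, 12 channels/socket
granite = peak_bandwidth_gbs(8800, channels=12)  # DDR5-8800 MCR; 12 ch is an ASSUMPTION
measured_granite_demo = 1500.0  # ~1.5 TB/s quoted from the demo
grace_superchip = 960.0         # Nvidia's quoted figure, not derived here

print(f"Dual-socket Genoa peak:          {genoa:.1f} GB/s")
print(f"Dual-socket Granite Rapids peak: {granite:.1f} GB/s (assumed 12 ch/socket)")
print(f"Demo result vs. theoretical:     {measured_granite_demo / granite:.0%}")
```

Measured bandwidth normally lands below the theoretical peak, so a 1.5 TB/s demo result is plausible under the 12-channel assumption.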

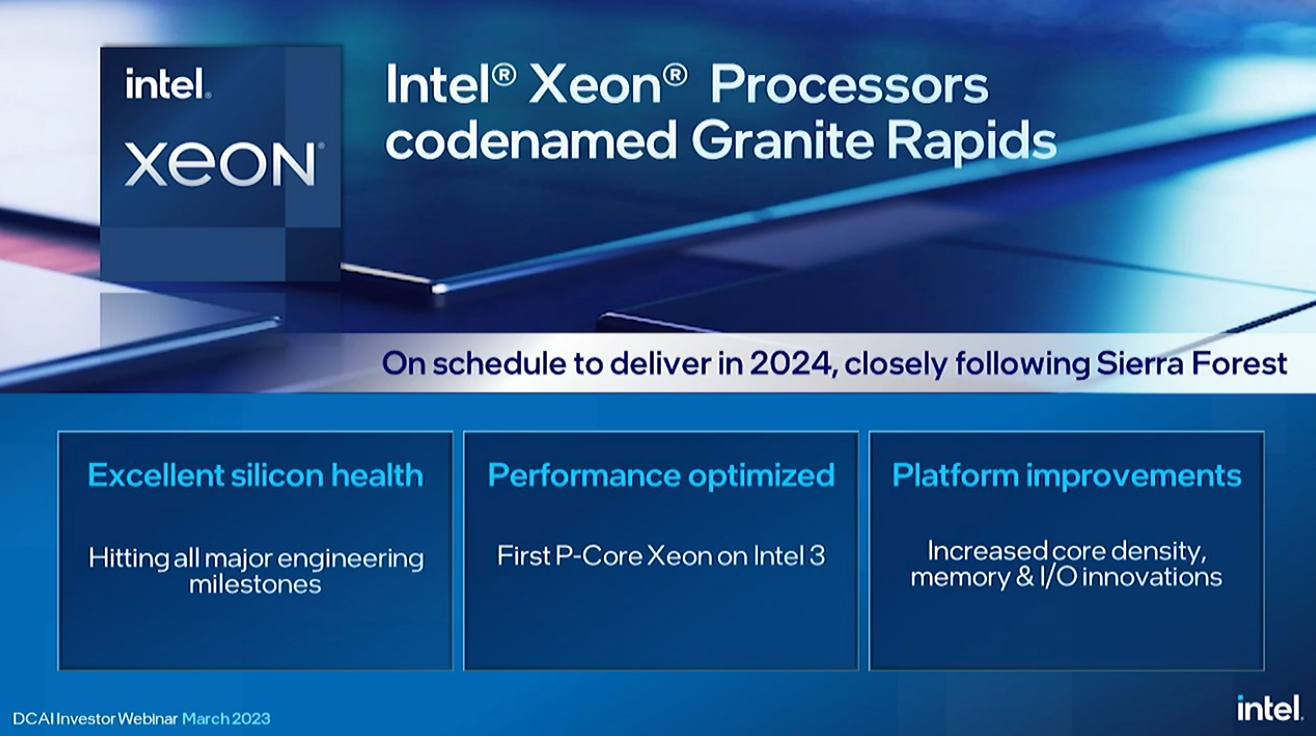

Granite Rapids will arrive in 2024, following Sierra Forest. Intel plans to manufacture the chip on the “Intel 3” process, a significant improvement over the “Intel 4” process, which lacked the high-density libraries Xeon requires. It’s the first P-core Xeon built on “Intel 3” and features more cores than Emerald Rapids, higher memory bandwidth from DDR5-8800 memory, and other unspecified I/O innovations. The chip is currently sampling to customers.

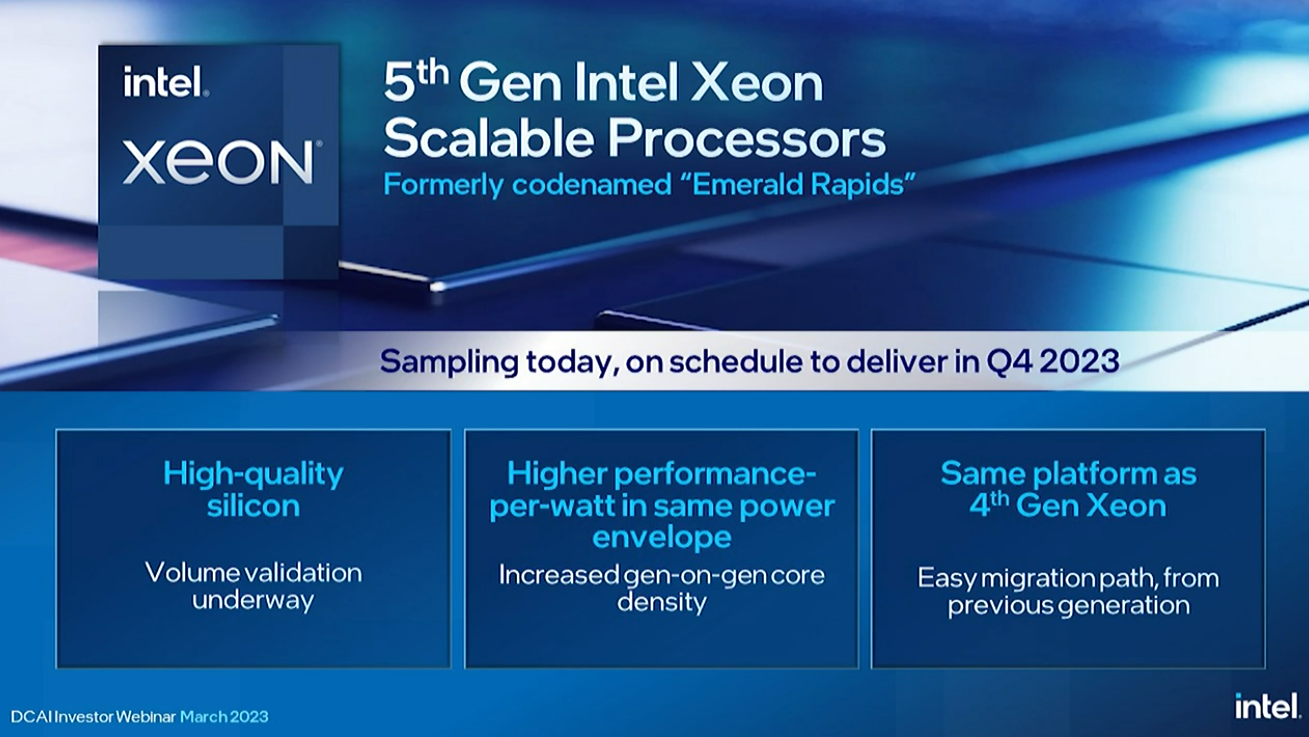

Rivera showed us the company’s upcoming Emerald Rapids chip. Intel’s next-gen Emerald Rapids is set to release in the fourth quarter of this year, which is a compressed timeframe considering Sapphire Rapids launched a few months ago.

Intel says the chip will offer higher performance, better power efficiency and, more importantly, more cores than its predecessor. Intel has Emerald Rapids silicon in house and says that validation is progressing as expected and the silicon meets or exceeds performance and power targets.

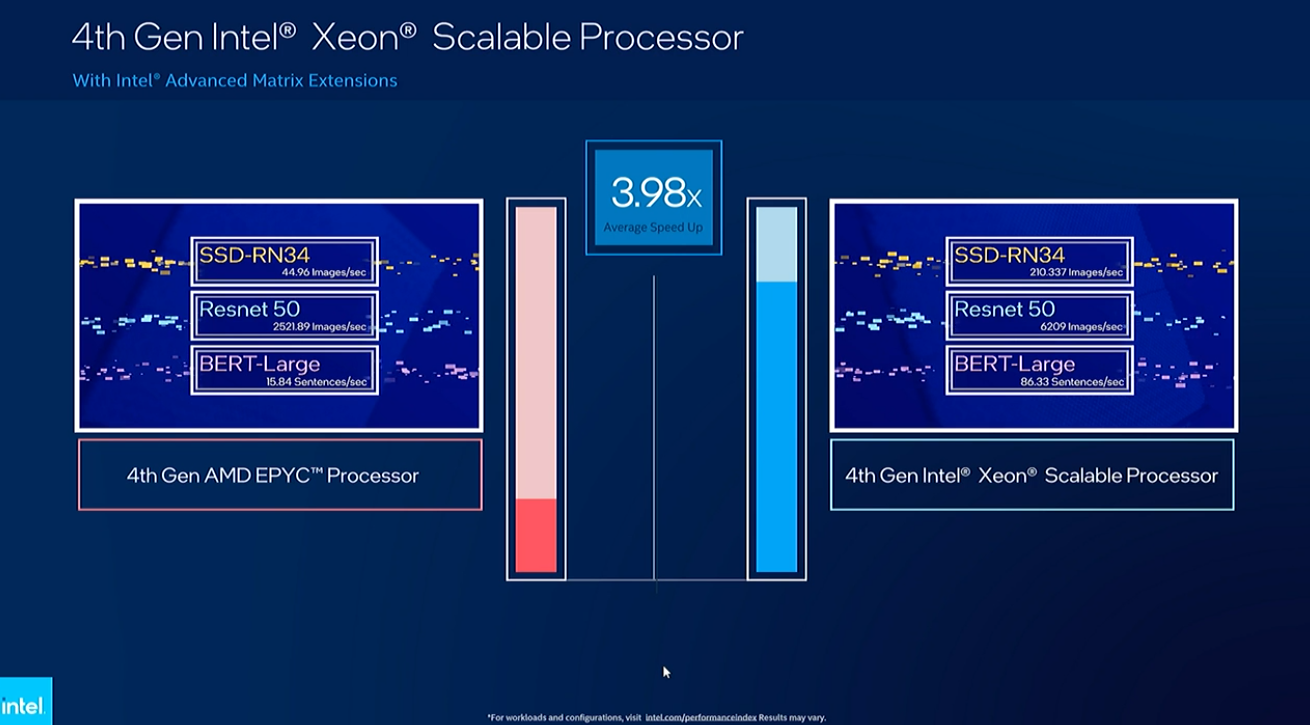

Intel’s Sapphire Rapids supports AI Boost (AMX) technology, which uses a variety of data types and matrix (tile) processing to improve AI performance. Lisa Spelman gave a demo showing a 48-core Sapphire Rapids outperforming a 48-core EPYC Genoa by 3.9x across a wide range of AI workloads.
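
AMX (Advanced Matrix Extensions) operates on 2D tiles: instructions such as TDPBF16PS multiply BF16 tiles and accumulate the results into FP32 tiles. The pure-Python sketch below only emulates that idea by splitting a matrix multiply into 16x32 by 32x16 BF16 blocks with FP32-rounded accumulation. It does not call AMX, ignores the hardware’s packed B-tile layout, and its accumulation order and rounding may differ from the real instruction. All function names are hypothetical.

```python
import struct

# Tile shapes modeled on AMX BF16 ops: a 16x32 BF16 A tile times a 32x16 BF16
# B tile accumulates into a 16x16 FP32 C tile (hardware data layout ignored).
TILE_M, TILE_K, TILE_N = 16, 32, 16


def to_fp32(x: float) -> float:
    """Round a Python float (double) to the nearest float32 value."""
    return struct.unpack("<f", struct.pack("<f", x))[0]


def to_bf16(x: float) -> float:
    """Round to bfloat16 via round-to-nearest-even on the float32 bit pattern.

    NaN and overflow handling are omitted in this sketch.
    """
    bits = struct.unpack("<I", struct.pack("<f", x))[0]
    bits += 0x7FFF + ((bits >> 16) & 1)
    bits = ((bits >> 16) << 16) & 0xFFFFFFFF
    return struct.unpack("<f", struct.pack("<I", bits))[0]


def tiled_matmul_bf16(a: list[list[float]], b: list[list[float]]) -> list[list[float]]:
    """C = A @ B with BF16-rounded inputs, computed one tile block at a time."""
    m, k = len(a), len(a[0])
    if len(b) != k:
        raise ValueError("inner dimensions must match")
    n = len(b[0])
    a16 = [[to_bf16(v) for v in row] for row in a]
    b16 = [[to_bf16(v) for v in row] for row in b]
    c = [[0.0] * n for _ in range(m)]
    for i0 in range(0, m, TILE_M):
        for j0 in range(0, n, TILE_N):
            for k0 in range(0, k, TILE_K):
                # One tile multiply-accumulate: C block += A block @ B block,
                # rounding the running sum to FP32 after every step.
                for i in range(i0, min(i0 + TILE_M, m)):
                    for j in range(j0, min(j0 + TILE_N, n)):
                        acc = c[i][j]
                        for kk in range(k0, min(k0 + TILE_K, k)):
                            acc = to_fp32(acc + a16[i][kk] * b16[kk][j])
                        c[i][j] = acc
    return c


# 20x40 @ 40x18 exercises partial tiles on every dimension.
a = [[((i * 7 + j * 3) % 11) / 8 for j in range(40)] for i in range(20)]
b = [[((i * 5 + j) % 9) / 8 for j in range(18)] for i in range(40)]
c = tiled_matmul_bf16(a, b)
print(len(c), "x", len(c[0]))
```

The sample inputs are small multiples of 1/8, so they are exact in BF16 and the tiled result matches a plain matrix multiply exactly; arbitrary inputs would show BF16 rounding error.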

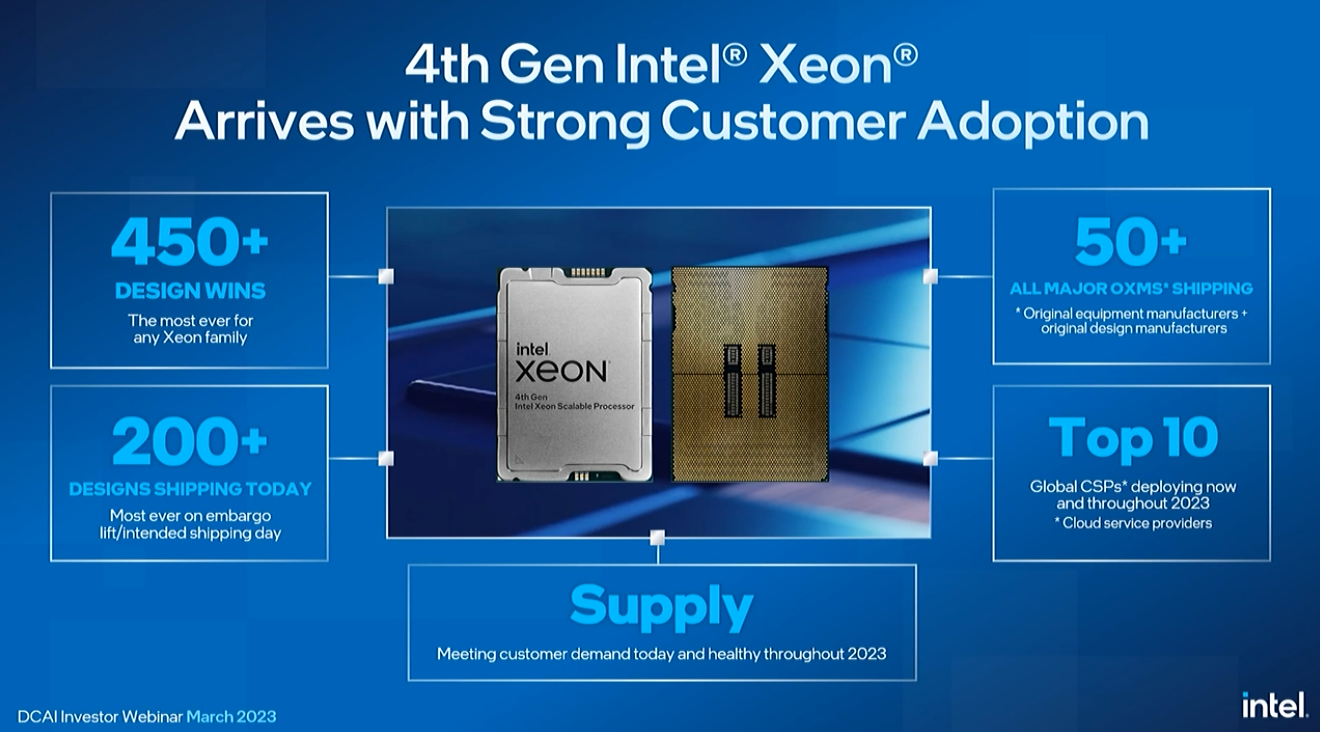

Intel says Sapphire Rapids has won over 450 designs, with more than 200 designs shipping from top OEMs. Intel claims a 2.9x gen-on-gen improvement in efficiency.

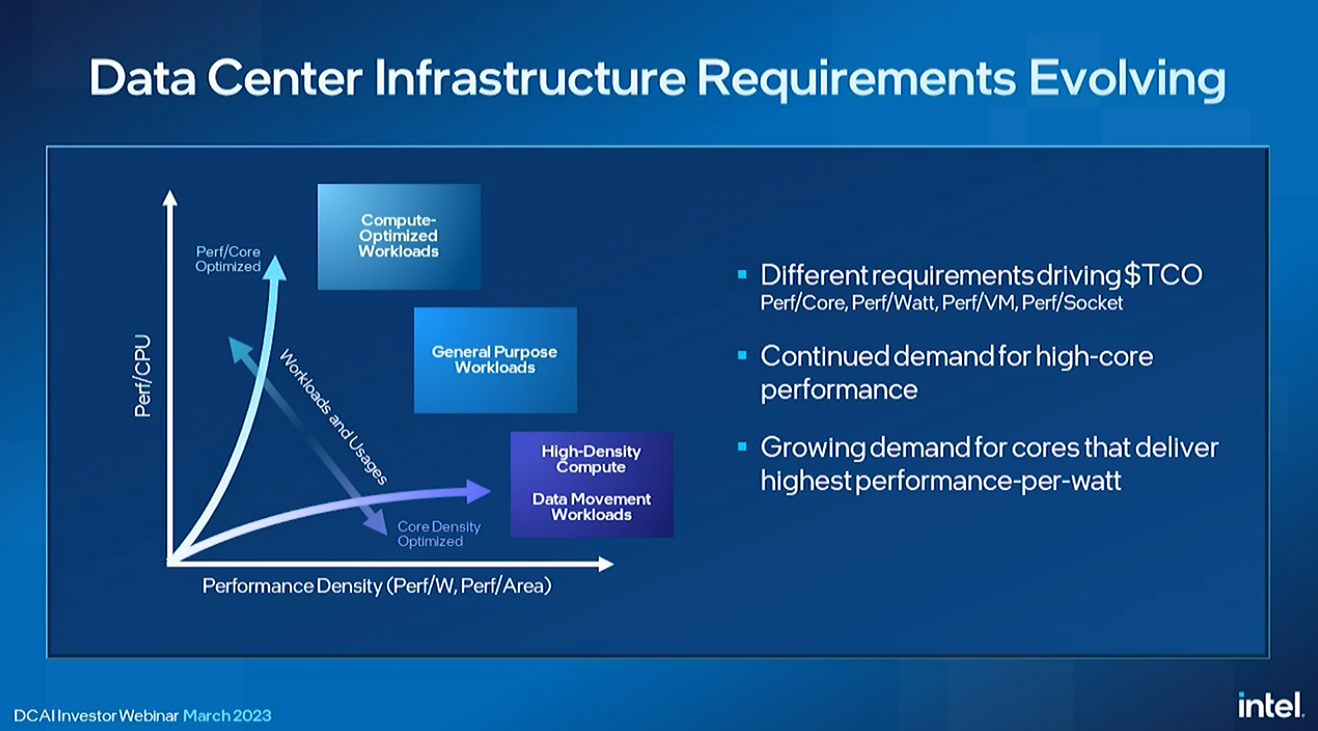

Intel has split its Xeon roadmap into two lines. One uses P-cores and the other uses E-cores, each with its own advantages. P-core (Performance core) models are traditional Xeon data center processors with only P-cores, which offer the full performance of Intel’s fastest architectures. These chips are designed to deliver the highest per-core performance and the best performance in AI workloads. They also come with built-in accelerators, as seen in Sapphire Rapids.

The E-core (Efficiency core) lineup, like the E-cores in Intel’s consumer chips, consists of chips with only smaller efficiency cores that omit some features, such as AMX and AVX-512, to increase density. These chips are designed to deliver high energy efficiency, core density, and aggregate throughput that are attractive to hyperscalers. Intel’s Xeon processors do not have models with both P-cores and E-cores on the same silicon, so these are different families with different use cases.

The E-core Xeons are designed to fight Arm-based competitors.

Intel is committed to developing a broad portfolio of software solutions that complement its chip portfolio.

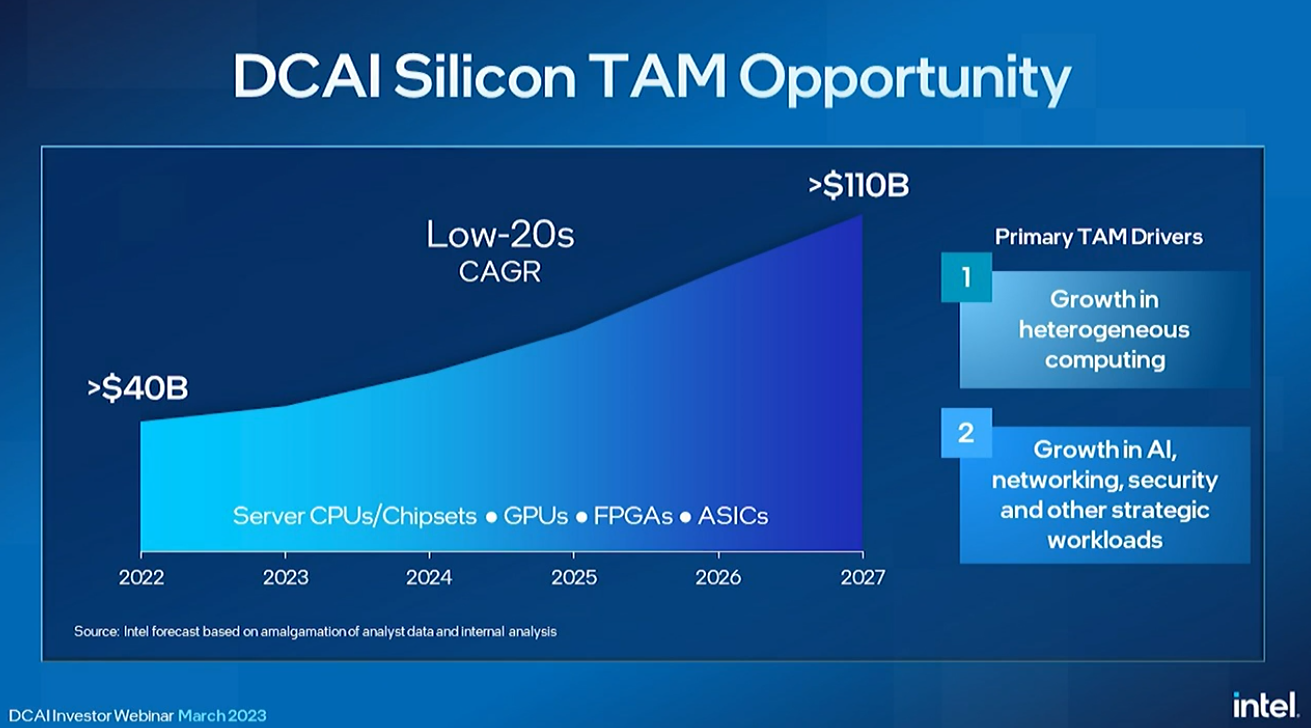

Rivera explained that Intel has often looked at total data center revenue through the lens of the CPU, but is now expanding that scope to include other types of compute, such as GPUs and custom accelerators.

Sandra Rivera takes the stage to outline a new data center roadmap, discuss the total addressable market (TAM) of Intel’s data center business, which she values at $110 billion, and highlight Intel’s efforts in the AI space.