Nvidia Tech Uses AI to Optimize Chip Designs up to 30X Faster

Nvidia is one of the leading designers of chips used to accelerate artificial intelligence (AI) and machine learning (ML). It is therefore fitting that the company is also one of the pioneers in applying AI to chip design. Today, it published a paper and blog post revealing how its AutoDMP system uses GPU-accelerated AI/ML optimization to speed up modern chip floorplanning, running up to 30X faster than previous methods.

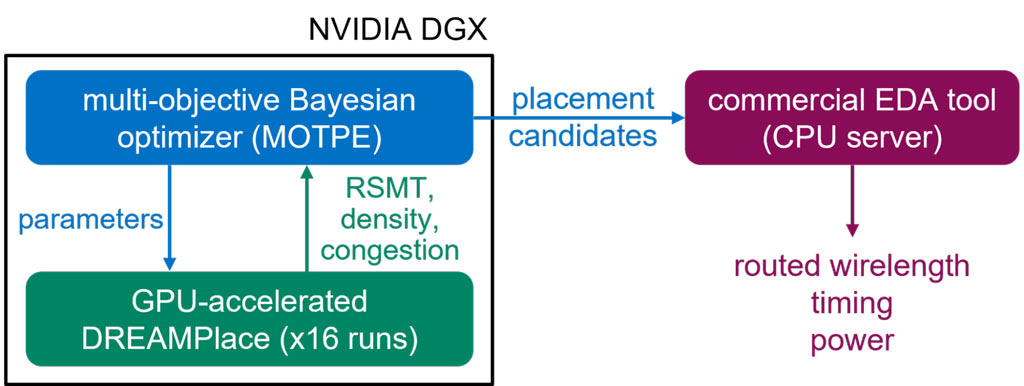

AutoDMP stands for Automated DREAMPlace-based Macro Placement. It is designed to plug into the electronic design automation (EDA) systems used by chip designers, accelerating and optimizing the time-consuming process of finding optimal placements for a processor’s building blocks. In one of Nvidia’s working examples, the tool applied AI to the problem of determining an optimal layout for 256 RISC-V cores, comprising 2.7 million standard cells and 320 memory macros. AutoDMP took 3.5 hours to find the optimal layout on a single Nvidia DGX Station A100.

The placement of macros has a big impact on the landscape of the chip, “directly impacting many design metrics, such as area and power consumption,” Nvidia said. Placement optimization is a key design task in optimizing chip performance and efficiency, with direct customer impact.

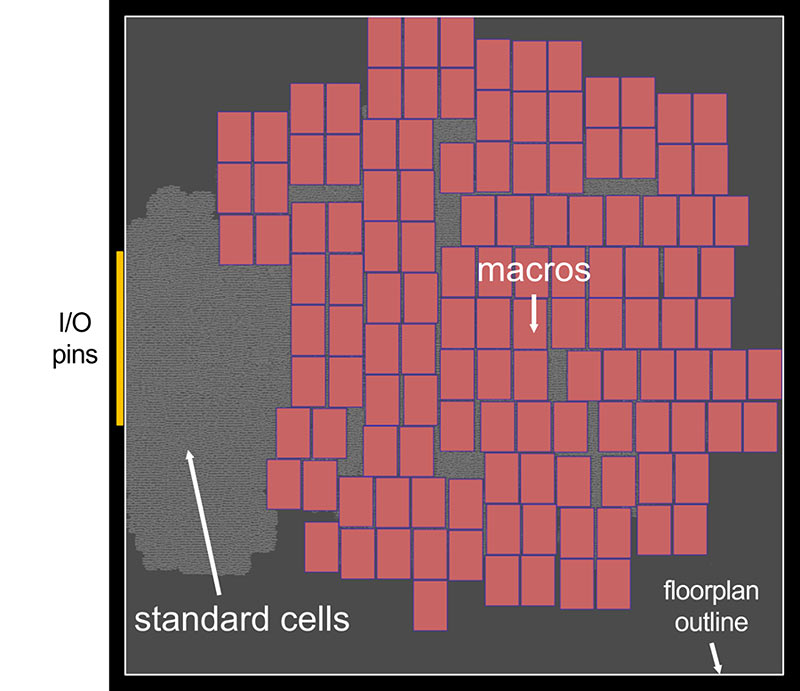

On the topic of how AutoDMP works, Nvidia says that its analytical placement tool “formulates the placement problem as a wire length optimization problem under placement density constraints and solves it numerically.” GPU-accelerated algorithms deliver up to a 30X speedup compared to previous placement methods. Additionally, AutoDMP supports mixed-size cells. In the animation above, you can see AutoDMP placing macros (red) and standard cells (grey) to minimize wire length within a constrained area.
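To make the quoted formulation more concrete, here is a minimal Python sketch of a placement cost that combines wire length with a density penalty. It is a toy illustration only, not AutoDMP or DREAMPlace code: the function names, the half-perimeter wire length estimate, the bin-based density overflow, the penalty weight `lam`, and the crude accept-if-better search are all assumptions made for this example. Cells are also treated as points that carry an area, which a real placer would not do.

```python
import numpy as np

def hpwl(pos, nets):
    """Half-perimeter wire length: sum of each net's bounding-box half-perimeter."""
    total = 0.0
    for net in nets:
        pts = pos[list(net)]
        total += float((pts[:, 0].max() - pts[:, 0].min())
                       + (pts[:, 1].max() - pts[:, 1].min()))
    return total

def density_overflow(pos, area, region, bins, target):
    """Cell area exceeding the target density, summed over a bins x bins grid."""
    bin_size = region / bins
    idx = np.clip((pos // bin_size).astype(int), 0, bins - 1)
    usage = np.zeros((bins, bins))
    np.add.at(usage, (idx[:, 0], idx[:, 1]), area)  # accumulate area per bin
    capacity = target * bin_size * bin_size
    return float(np.maximum(usage - capacity, 0.0).sum())

def cost(pos, nets, area, region, bins, target, lam):
    """Wire length plus a weighted penalty for violating the density target."""
    return hpwl(pos, nets) + lam * density_overflow(pos, area, region, bins, target)

def refine(pos, nets, area, region, bins, target, lam, steps=200, seed=0):
    """Toy numerical search (an assumption, not a gradient-based solver):
    nudge one cell at a time and keep the move only if the cost drops."""
    rng = np.random.default_rng(seed)
    best = pos.astype(float).copy()
    best_cost = cost(best, nets, area, region, bins, target, lam)
    for _ in range(steps):
        trial = best.copy()
        i = rng.integers(len(trial))
        trial[i] = np.clip(trial[i] + rng.normal(scale=0.5, size=2), 0.0, region)
        c = cost(trial, nets, area, region, bins, target, lam)
        if c < best_cost:
            best, best_cost = trial, c
    return best, best_cost

# Three hypothetical cells in a 4 x 4 region, connected by two nets.
pos = np.array([[0.0, 0.0], [2.0, 0.0], [2.0, 3.0]])
area = np.array([3.0, 1.0, 1.0])
nets = [(0, 1), (0, 1, 2)]

print(hpwl(pos, nets))                            # 7.0
print(density_overflow(pos, area, 4.0, 2, 0.5))   # 1.0
new_pos, new_cost = refine(pos, nets, area, 4.0, 2, 0.5, lam=2.0)
```

Because `refine` only accepts moves that lower the cost, the returned cost can never exceed the starting value. Production analytical placers instead use smooth approximations of wire length and density so that gradient-based solvers can run efficiently on GPUs.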

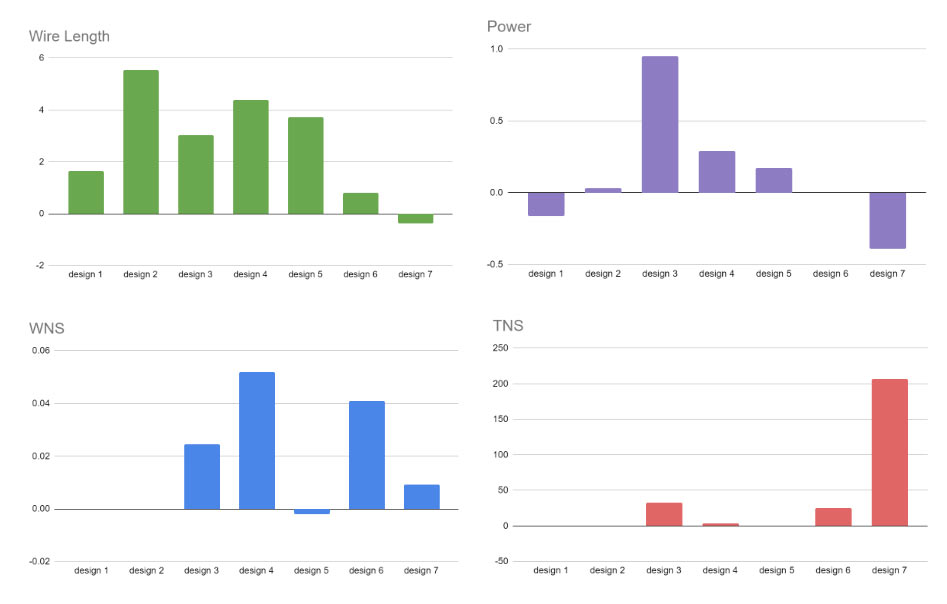

We’ve talked about the design speed benefits of using AutoDMP, but haven’t yet touched on the qualitative benefits. In the figure above, you can see that, compared to seven existing alternative designs for a test chip, the AutoDMP-optimized chip offers clear advantages in wire length, power, worst negative slack (WNS), and total negative slack (TNS). Results above the line indicate a win for AutoDMP over the various rival designs.
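For readers unfamiliar with the two timing metrics, the short Python sketch below shows how WNS and TNS are commonly derived from per-path timing slacks. The function name and the slack values are hypothetical, and the convention used here (WNS reported as zero when no path fails timing) is an assumption; some tools report the minimum slack even when it is positive.

```python
def wns_tns(slacks):
    """Return (WNS, TNS) for a list of path slacks in nanoseconds.

    WNS: the single most negative slack, or 0.0 if every path meets timing.
    TNS: the sum of all negative slacks.
    """
    wns = min(min(slacks), 0.0)
    tns = sum((s for s in slacks if s < 0), 0.0)
    return wns, tns

# Hypothetical slacks: two paths miss timing, two meet it.
print(wns_tns([0.25, -0.5, -0.25, 0.125]))  # (-0.5, -0.75)
```

Values closer to zero are better for both metrics, since they mean fewer and smaller timing violations.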

AutoDMP is open source and the code is publicly available on GitHub.

Nvidia isn’t the first chip designer to leverage AI to optimize layouts. In February we reported on Synopsys and its DSO.ai automation tool, which has already been used on 100 commercial tapeouts. Synopsys described its solution as an “expert engineer in a box.” It added that DSO.ai is a great fit for the increasingly popular multi-die silicon designs, and that using it frees engineers from tedious, repetitive tasks, allowing them to focus their talents on innovation.