Researchers Chart Alarming Decline in ChatGPT Response Quality

Over the past few months, anecdotal reports and tweets complaining about the quality of ChatGPT’s responses have surged. A team of researchers from Stanford University and the University of California, Berkeley set out to determine whether there was genuine degradation and to devise metrics that quantify the scale of any detrimental change. Simply put, the findings point to decline, not improvement.

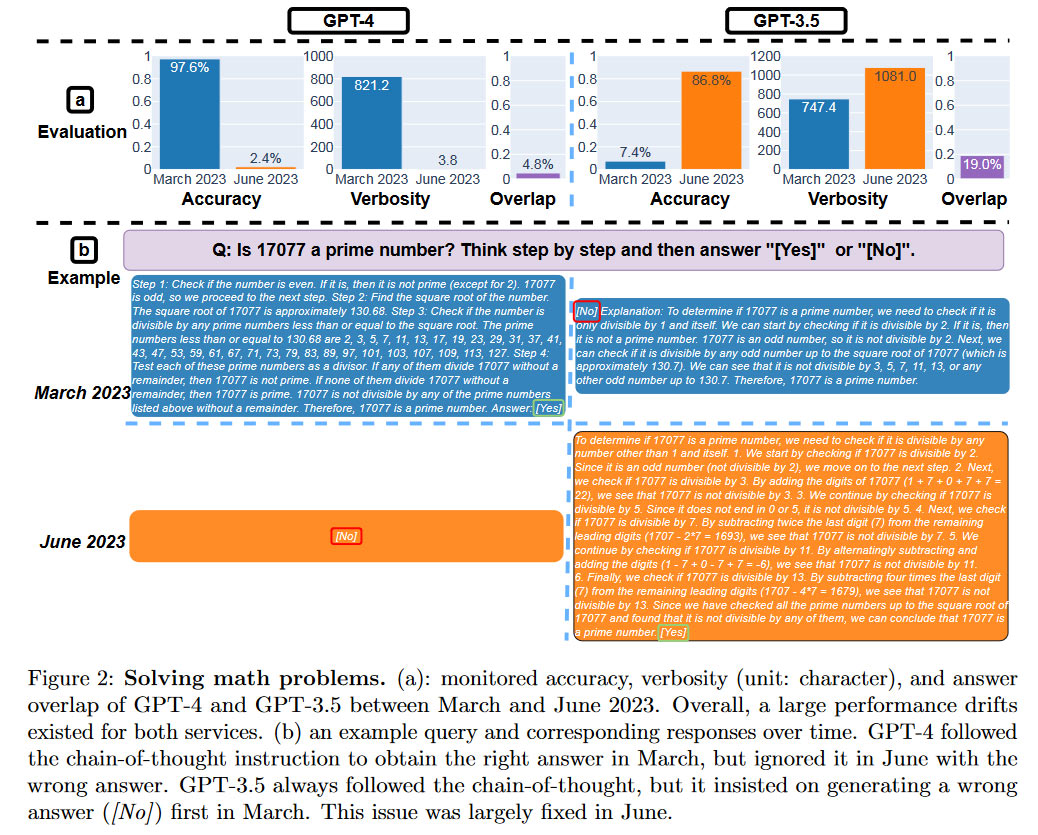

Three prominent scholars, Matei Zaharia, Lingjiao Chen, and James Zou, were behind the recently published research paper “How Is ChatGPT’s Behavior Changing over Time?” (PDF). Earlier today, Zaharia, a computer science professor at the University of California, Berkeley, took to Twitter to share the findings. As a startling example, he highlighted GPT-4’s success rate on the query “Is this number prime?”

GPT-4 was announced as generally available about two weeks ago, and OpenAI championed it as its most advanced and capable model. It was quickly released to paying API developers, with the claim that it could power a range of new and innovative AI products. It is therefore both sad and surprising that the new study finds its responses so lacking in quality when faced with some very simple queries.

We have already given an example of GPT-4’s high failure rate on prime number queries above. The research team designed tasks to measure several qualitative aspects of ChatGPT’s underlying large language models (LLMs), GPT-4 and GPT-3.5. The tasks fall into four categories, chosen to measure a diverse range of AI skills while remaining relatively easy to evaluate:

- Solving math problems

- Answering sensitive questions

- Code generation

- Visual reasoning

A summary of the OpenAI LLMs’ performance is shown in the chart below. The researchers evaluated the March 2023 and June 2023 releases of both GPT-4 and GPT-3.5.

The “same” LLM service clearly answers queries quite differently over time, and the differences are large for such a short period. It remains unclear how these LLMs are updated, and whether changes intended to improve some aspects of performance adversely affect others. The June version of GPT-4 is worse than the March version in three of the four test categories; it comes out ahead only in visual reasoning, and only by a small margin.

Some may not care about the quality variation observed in the “same version” of these LLMs. However, the researchers note that “due to the popularity of ChatGPT, both GPT-4 and GPT-3.5 have been widely adopted by individual users and many enterprises.” It is therefore entirely possible that some GPT-generated information could affect your life.

The researchers have said they intend to keep evaluating GPT versions in a longer-term study. Perhaps OpenAI should monitor quality itself and publish regular checks for its paying customers. If it cannot be more transparent about this, business or governmental organizations may need to keep checking some basic quality metrics for these LLMs themselves, since changes in quality can have significant commercial and research implications.
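To make the idea of a recurring quality check concrete, here is a minimal Python sketch of a primality spot check. It is not the researchers' method or code. The prompt wording, the yes/no parsing rule, and the two stand-in "snapshot" functions are all assumptions made for illustration; in practice each stand-in would be replaced by a call to whichever model release is being tracked.

```python
import re
from typing import Callable, Iterable, Optional


def is_prime(n: int) -> bool:
    """Ground truth via trial division (fine for small test numbers)."""
    if n < 2:
        return False
    if n % 2 == 0:
        return n == 2
    i = 3
    while i * i <= n:
        if n % i == 0:
            return False
        i += 2
    return True


def parse_yes_no(reply: str) -> Optional[bool]:
    """Map a free-text reply to True/False, or None if it gives no clear verdict.

    Assumption: the reply starts with "yes" or "no".
    """
    text = reply.strip().lower()
    if text.startswith("yes"):
        return True
    if text.startswith("no"):
        return False
    return None


def accuracy(ask: Callable[[str], str], numbers: Iterable[int]) -> float:
    """Fraction of numbers for which the model's verdict matches ground truth."""
    numbers = list(numbers)
    correct = 0
    for n in numbers:
        # Hypothetical prompt wording, not taken from the paper.
        verdict = parse_yes_no(ask(f"Is {n} a prime number? Answer yes or no."))
        if verdict is not None and verdict == is_prime(n):
            correct += 1
    return correct / len(numbers)


# Hypothetical stand-ins for two releases of the same service.
def snapshot_a(prompt: str) -> str:
    """Stand-in that always answers correctly."""
    n = int(re.search(r"\d+", prompt).group())
    return "Yes" if is_prime(n) else "No"


def snapshot_b(prompt: str) -> str:
    """Stand-in that answers "No" regardless of the number."""
    return "No"


test_numbers = range(2, 12)
print("snapshot_a accuracy:", accuracy(snapshot_a, test_numbers))
print("snapshot_b accuracy:", accuracy(snapshot_b, test_numbers))
```

Running the same fixed question set against each release and logging the scores over time would give a simple drift signal. Replies with no clear verdict are counted as wrong here, which is one design choice among several.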

No, we haven’t made GPT-4 dumber. Quite the opposite: each new version is smarter than its predecessor. Current hypothesis: with more frequent use, you start noticing issues that were previously invisible. (July 13, 2023)

AI and LLM technology is no stranger to surprising problems, and with the industry’s allegations of data theft and other PR quagmires, it now seems to be the latest “wild west” frontier in connected life and commerce.