a modern open source data stack for blockchain

1. Challenges of the Modern Blockchain Data Stack

There are some challenges that modern blockchain indexing startups can face.

- lots of data. As the amount of data on the blockchain grows, the data index needs to scale up to handle the increased load and provide efficient access to the data. This results in higher storage costs, slower metric calculations, and increased load on the database server.

- Complex data processing pipelines. Blockchain technology is complex, and building a comprehensive and reliable data index requires a deep understanding of the underlying data structures and algorithms. The diversity of blockchain implementations inherits that. To give a concrete example, his NFTs on Ethereum are usually created within smart contracts according to ERC721 and ERC1155 formats. By contrast, Polkadot implementations, for example, are typically built directly within the blockchain runtime. They should be considered NFTs and stored as such.

- integration capabilities. To provide maximum value to users, a blockchain indexing solution may need to integrate its data index with other systems such as analytics platforms and APIs. This is difficult and requires a lot of architectural design effort.

As blockchain technology has become more popular, the amount of data stored on blockchains has increased. This is because more and more people are using this technology and each transaction adds new data to the blockchain. Additionally, blockchain technology has evolved from simple money transfer applications involving the use of Bitcoin to more complex applications involving the implementation of business logic within smart contracts. These smart contracts can generate large amounts of data, contributing to the increasing complexity and size of blockchains. Over time, this has led to larger and more complex blockchains.

In this article, we review the evolution of Footprint Analytics’ technology architecture step-by-step as a case study and explore how the Iceberg-Trino technology stack addresses on-chain data challenges.

Footprint Analytics has indexed approximately 22 public blockchain data, 17 NFT marketplaces, 1900 GameFi projects, and over 100,000 NFT collections into a semantic abstraction data layer. It is the world’s most comprehensive blockchain data warehouse solution.

Data analysts frequently query blockchain data, which contains more than 20 billion rows of records of financial transactions. This is different from traditional data warehouse ingress logs.

Three major upgrades have been made in the last few months to meet growing business requirements.

2. Architecture 1.0 BigQuery

At the start of footprint analysis, google big query As a storage and query engine. BigQuery is a great product. It’s extremely fast and easy to use, offering dynamic math capabilities and a flexible UDF syntax so you can get your work done quickly.

However, BigQuery also has some problems.

- The data is uncompressed, which makes it costly, especially when storing raw data for more than 22 blockchains for footprint analysis.

- Poor concurrency: Bigquery only supports 100 concurrent queries. This is not suitable for high concurrency scenarios for footprint analytics serving a large number of analysts and users.

- Lock in with Google Bigquery, a closed source product.

So I decided to look into other alternative architectures.

3. Architecture 2.0 OLAP

We were very interested in some of the very popular OLAP products. OLAP’s most compelling advantage is query response time. It typically takes less than a second to return query results for large amounts of data and can support thousands of concurrent queries.

We chose one of the best OLAP databases. Doris,try it. This engine works well. But at some point I soon ran into other problems.

- Data types such as arrays and JSON are not yet supported (November 2022). Arrays are a common type of data in some blockchains. for example, topic field in the evm log. The inability to compute with arrays directly impacts the ability to compute many business metrics.

- Limited support for DBT and merge statements. These are common requirements for data engineers in ETL/ELT scenarios who need to update newly indexed data.

That said, we couldn’t use Doris for our entire production data pipeline. So, using Doris as her OLAP database to act as a query engine and try to solve some of the problems in the data production pipeline to provide fast and advanced services. Concurrent query capability.

Unfortunately, we couldn’t replace Bigquery with Doris, so we had to use Bigquery as our query engine and sync data from Bigquery to Doris on a regular basis. There were some issues with this sync process. One of them was that update writes quickly piled up when the OLAP engine was busy serving queries to front-end clients. After that, the writing process slowed down, syncing took a long time, and sometimes even failed to complete.

We realized that OLAP could solve some of the problems we face, but it could not be a turnkey solution for footprint analysis, especially when it comes to data processing pipelines. Our problem was larger and more complex, and I would say that his OLAP as query engine alone was not enough.

4. Architecture 3.0 Iceberg + Torino

Welcome to Footprint Analytics Architecture 3.0. It’s a complete overhaul of the underlying architecture. We redesigned the entire architecture from the ground up, separating data storage, computation, and querying into three distinct parts. We take lessons from two previous architectures for footprint analysis and learn from the experiences of other successful big data projects such as Uber, Netflix, and Databricks.

4.1. Introduction to data lakes

Our first focus was on data lakes, a new type of data storage for both structured and unstructured data. Data lakes are ideal for on-chain data storage, as the on-chain data format can range from unstructured raw data to structured abstract data. We expected to use a data lake to solve our data storage problem. Ideally, it would also support mainstream computing engines such as Spark and Flink, making it painless to integrate with different types of processing engines as footprint analysis evolves. .

Iceberg is very well integrated with Spark, Flink, Trino, and other computational engines, allowing you to choose the best computation for each metric. for example:

- If you need complex computational logic, Spark is your best bet.

- Flink for real-time computation.

- Use Trino for simple ETL tasks that can be done using SQL.

4.2. Query Engine

Iceberg solved our storage and computational problems, so we had to consider our choice of query engine. There are not many options available.The alternatives we considered are

The most important consideration before digging deeper was that future query engines must be compatible with the current architecture.

- To support BigQuery as a data source

- Supports DBT and relies on generation of many indicators

- To support the BI tool metabase

Based on the above, I chose Trino, which has very good Iceberg support. The team responded very quickly and I reported the bug. This was fixed the next day and released to the latest version the following week. This was the best choice for our footprint team who also needed high implementation responsiveness.

4.3. Performance test

Once we decided on a direction, we did some performance testing with the Trino + Iceberg combination to see if it could meet our needs. To my surprise, the query was incredibly fast.

Knowing that Presto + Hive has been the worst comparison in the OLAP hype for years, the Trino + Iceberg combination completely blew our minds.

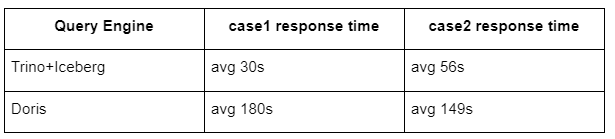

Here are the results of our tests.

Case 1: Joining large datasets

800 GB Table 1 joins another 50 GB Table 2 to perform complex business calculations

Case 2: Run separate queries with a single large table

Test SQL: select Distinct(address) from table group by day

The Trino+Iceberg combination is about three times faster than Doris with the same configuration.

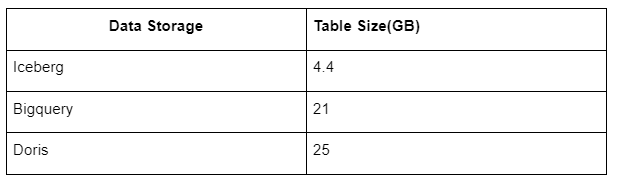

In addition, Iceberg can use data formats such as Parquet, ORC, etc., which store data in compression, so there is another surprise. Iceberg’s table storage takes up about 1/5th the space of other data warehouses The storage sizes for the same table in the three databases are:

Note: The above tests are examples encountered in real production and are for reference only.

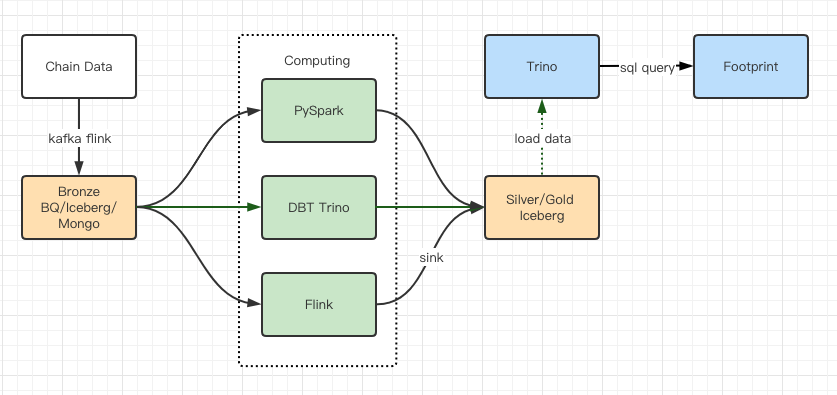

4.4. Upgrade Effects

A performance test report showed good enough performance that it took the team about two months to complete the migration. Here is a diagram of the architecture after the upgrade.

- Multiple computer engines match our different needs.

- Trino supports DBT and can query Iceberg directly, eliminating the need to handle data synchronization.

- Trino + Iceberg’s amazing performance allows us to open up all the Bronze data (raw data) to our users.

5. Summary

Since its inception in August 2021, the Footprint Analysis team has successfully completed three projects in less than a year and a half, thanks to its strong desire and determination to bring the benefits of the best database technology to crypto users, as well as its solid execution of implementation and implementation. Architecture upgrade completed. Upgrade the underlying infrastructure and architecture.

Footprint Analytics Architecture Upgrade 3.0 provides a new experience for users, empowering users with different backgrounds to gain insights across a wider variety of usages and applications.

- Built with Metabase BI tools, Footprint allows analysts to access decoded on-chain data, explore with complete freedom of choice (no code or hardcode), query the entire history, and Makes it easy to cross-examine sets and gain insights. no time.

- Integrate both on-chain and off-chain data into analytics across web2+web3.

- By building/querying metrics on top of Footprint’s business abstraction, analysts or developers can save 80% of the time on repetitive data processing tasks and generate meaningful business-based metrics, surveys, and products. Focus on your solutions.

- Seamless experience from Footprint Web to REST API calls, all based on SQL

- Support investment decisions with real-time alerts and actionable notifications on critical signals